Theory of Mind as a Mediator of Reasoning and Facial Emotion Recognition: Findings from 200 Healthy People


Abstract

Objective

It has been proposed that the ability to recognize facial emotions is closely related to complex neurocognitive processes and/or to skills related to theory of mind (ToM). This study examined whether ToM skills mediate the relationship between higher neurocognitive functions, such as reasoning ability, and facial emotion recognition.

Methods

A total of 200 healthy subjects (101 males, 99 females) were recruited. Facial emotion recognition was measured using 64 facial emotional stimuli selected from the Korean Facial Expressions of Emotion (KOFEE) photograph database. Participants also completed the Theory of Mind Picture Stories task and the Standard Progressive Matrices (SPM).

Results

Multiple regression analysis showed that the SPM score (t=3.19, p=0.002, β=0.22) and the overall ToM score (t=2.56, p=0.011, β=0.18) were significant predictors of the total hit rate (%) on the emotion recognition task. Hierarchical regression analysis using a three-step mediation model showed that ToM may partially mediate the relationship between SPM and performance on facial emotion recognition.

Conclusion

These findings imply that higher neurocognitive functioning, including reasoning, may not only contribute directly to facial emotion recognition but also influence ToM, which in turn influences facial emotion recognition. Because the sample consisted of healthy people aged 15 to 25 years, these findings pertain particularly to healthy young people.

INTRODUCTION

Facial emotion recognition abilities are crucial for developing successful relationships and for healthy psychological functioning.1,2,3 The most widely used measure of facial emotion recognition is the emotion-labeling test, which requires subjects to choose the emotional category that best describes the emotional state of the person in the photograph shown. This test draws on complex neurocognitive processes. According to previous studies of healthy controls4,5 and of clinical patients4,6,7,8 with brain disorders such as schizophrenia, these processes may include the visual scanning of facial features and vigilance, which facilitates readiness to respond to relevant stimuli;6 working memory, which keeps information online long enough for the test-taker to compare the facial photograph with the various emotion labels;7,8 semantic memory, used for encoding and retrieving emotion labels;9 concept formation and cognitive flexibility, which are executive functions required for choosing the appropriate emotion label and changing that label during each emotion recognition trial;7 and the reasoning function of abstraction, which is needed to recognize and distinguish between different facial emotional stimuli.5

In addition to neurocognition, theory of mind (ToM), the ability to make inferences about the mental states of others,10 may be relevant to facial emotion recognition. Individuals do not interpret the emotional expressions of others on the basis of surface meaning alone; instead, they incorporate intentions, knowledge, and beliefs, and the integration of these factors yields unique interpretations of events. Bryson et al. proposed that ToM contributes to facial emotion recognition as follows: to label another individual's internal experiences on the basis of the behavioral signs produced by his or her internal states, subjects must have formed a concept of internal space for themselves and for others, which is a core component of ToM. Thus, performance on a facial emotion recognition test should also reflect one's ability to acknowledge the existence of others' internal states. Recently, five core constructs for social and emotional behavior, which might operate independently or in various combinations, were proposed to have distinctive and interactive functions:11 1) the acquisition of social-affective values and responses, 2) recognition of social-affective stimuli, 3) embodied simulation or low-level mental state inference, 4) high-level mental state inference, and 5) context-sensitive regulation. According to this model, understanding the meaning of facial emotions requires not only the acquisition of social-affective values but also low-level simulation (low-level ToM) and higher-level ToM skills.
According to Goldman's (2005) view of the contribution of low-level simulation, the visual representation of a target's facial expression would induce an emotional experience in the subject via a simulation process; the subject can then classify his or her current emotional state and attribute it to the target.12 To attribute this emotional state accurately to the target, the subject must differentiate himself or herself from others, which is a core component of ToM. Regarding the possible contribution of higher-level ToM skills,11 the meaning of a facial expression is determined by the context in which it is displayed. For example, a smiling face may express unambiguous happiness or sarcasm, depending on the context. To recognize a facial expression accurately across contexts, a third-person perspective is needed to integrate the context with the facial expression, and taking a third-person perspective is also a core component of ToM. Indeed, although they are relatively few, some studies have found that ToM skills are associated with facial emotion recognition in healthy subjects13 and in clinical patients with schizophrenia14 or autistic disorder.15

In addition, several studies have reported relationships between neurocognition and ToM. More specifically, the important neurocognitive processes supporting ToM were found to be the executive functions of attentional flexibility,16 inhibition of default information,16 and updating of information in working memory16 in healthy adults16 and children,17 as well as in clinical patients, including those with traumatic brain injury18 and schizophrenia.19,20

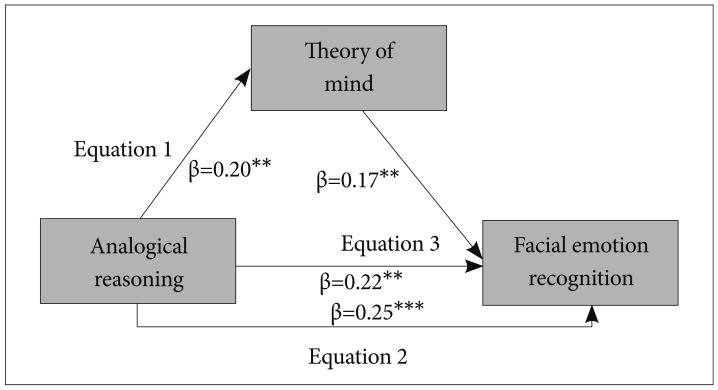

Therefore, ToM may play a mediating role in the relationship between higher neurocognitive functions, such as reasoning ability, and facial emotion recognition. Clarifying this potential role of ToM is important for elucidating the pathogenetic mechanisms of facial emotion recognition deficits in clinical populations that perform poorly on verbal and/or pictorial story tasks of ToM. On the basis of previous findings, we hypothesized that reasoning ability and ToM would be independent predictors of facial emotion recognition. More importantly, we hypothesized that ToM would mediate the relationship between reasoning ability and facial emotion recognition in healthy people. We used facial photographs of basic emotions without any contextual information; this ambiguous context may draw on ToM skills and thereby reveal their contribution to facial emotion recognition performance. To conclude that ToM mediates the relationship between reasoning ability and facial emotion recognition, three conditions must be met: 1) the potential mediator (i.e., ToM) must be significantly associated with reasoning ability, 2) facial emotion recognition must also be significantly associated with reasoning ability, and 3) the potential mediator (i.e., ToM) must still be associated with facial emotion recognition when included in a multiple regression model with reasoning ability (Figure 1). If ToM acts as a mediator, the effect of reasoning ability should weaken once ToM is included in the model, because the mediator accounts for a portion of that effect.

Figure 1. To conclude that ToM mediates the relationship between reasoning ability and facial emotion recognition, three conditions must be met: equation 1) the potential mediator (i.e., ToM) must be significantly associated with reasoning ability; equation 2) facial emotion recognition must also be significantly associated with reasoning ability; and equation 3) the potential mediator (i.e., ToM) must still be associated with facial emotion recognition when included in a multiple regression model with reasoning ability. *p<0.05, **p<0.01, ***p<0.001.
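Using conventional mediation notation, the three regression equations can be written as follows. The path labels a, b, c, and c' and the abbreviation FER (total hit rate on the facial emotion recognition task) are our assumptions for illustration, not labels taken from Figure 1.

$$
\begin{aligned}
\text{(1)}\quad \mathrm{ToM}_i &= i_1 + a\,\mathrm{SPM}_i + e_{1i} \\
\text{(2)}\quad \mathrm{FER}_i &= i_2 + c\,\mathrm{SPM}_i + e_{2i} \\
\text{(3)}\quad \mathrm{FER}_i &= i_3 + c'\,\mathrm{SPM}_i + b\,\mathrm{ToM}_i + e_{3i}
\end{aligned}
$$

Condition 1 corresponds to a being nonzero, condition 2 to c being nonzero, and condition 3 to b being nonzero in equation (3). Partial mediation is indicated when c' remains nonzero but is smaller in magnitude than c; the indirect effect is the product ab.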

METHODS

Subjects

A total of 200 healthy subjects (101 males, 99 females) were recruited via an Internet job advertisement from August 2011 to July 2012. All subjects met the inclusion criterion of being between 15 and 25 years old. We selected this age group because we plan to conduct a similar study of people at the peak age of onset of psychiatric illness. Individuals with a current or past history of psychiatric or neurological illness, or with traumatic brain injury, were excluded on the basis of a preliminary telephone interview. Written informed consent was obtained from all participants after the procedure had been fully explained to them; for participants younger than 18 years, informed consent was also obtained from their parents. The study was reviewed and approved by the Institutional Review Board of the Severance Mental Health Hospital. Data from all participants were included in the analysis.

Participants' mean age was 20.5 years (SD=2.0), and their mean education was 13.4 years (SD=1.4).

Facial emotion recognition task

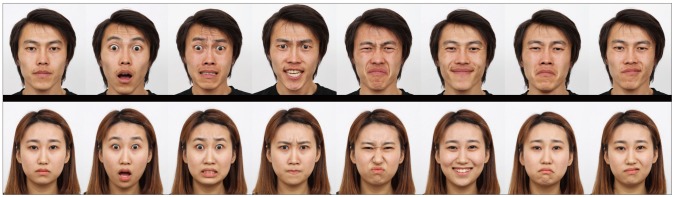

The 64 Korean facial stimuli were selected from the Korean Facial Expressions of Emotion (KOFEE) database (Figure 2).21 The KOFEE stimuli were standardized in our laboratory and are commonly used in emotion recognition research in Korea. The KOFEE comprises photographs of seven universal facial expressions (happiness, disgust, anger, sadness, surprise, fear, and contempt) and neutral faces, posed by Korean models. All facial expressions in the KOFEE were coded using Ekman and Friesen's Facial Action Coding System (FACS), which indicates that each expression is a direct analog of expressions that occur in real life when people express their emotions facially.22 Sixty-four photographs (8 of each emotion and 8 neutral faces) showed an acceptable inter-rater agreement rate (>70%) in our previous standardization study21 (n=105 Korean college students). The selected photographs were adjusted using PhantaMorph 5.0 (Abrosoft, USA). Each of the 64 photographs was presented with a response sheet placed below it. Subjects were asked to choose, at their own pace, the emotion category that best described the emotional state of the person in the photograph on the basis of the facial expression. A forced-choice format was used, with the categories "happiness," "disgust," "anger," "sadness," "surprise," "fear," "contempt," and "neutral." Because the face and the labels remained on the same computer monitor until the subject made a choice, the task did not place additional demands on encoding and retrieval by semantic memory.7 The total hit rate (%) was calculated as the percentage of correct responses across the 64 facial photographs.
The facial stimuli and response sheet were presented, and responses were recorded, using Neuroguide (Applied Neuroscience, USA), E-Prime 2.0 (Psychology Software Tools, USA), and Inquisit 3.0 (Millisecond Software, USA).
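The total hit rate is a simple proportion of correct labels. The following is a minimal Python sketch, assuming that the 64 target labels and one subject's 64 responses are available as lists of the eight category names; the function names `total_hit_rate` and `hit_rate_by_category` are hypothetical, and the per-category breakdown is an illustrative extra rather than part of the scoring described above.

```python
# Hypothetical scoring helpers for the 64-item forced-choice emotion labeling task.
EMOTIONS = ("happiness", "disgust", "anger", "sadness",
            "surprise", "fear", "contempt", "neutral")


def total_hit_rate(targets, responses):
    """Percentage of trials on which the chosen label matches the target label."""
    if len(targets) != len(responses) or not targets:
        raise ValueError("targets and responses must be non-empty and equal in length")
    for label in list(targets) + list(responses):
        if label not in EMOTIONS:
            raise ValueError(f"unknown label: {label!r}")
    hits = sum(t == r for t, r in zip(targets, responses))
    return 100.0 * hits / len(targets)


def hit_rate_by_category(targets, responses):
    """Per-category hit rate (%), grouping trials by their target emotion."""
    totals = {e: 0 for e in EMOTIONS}
    hits = {e: 0 for e in EMOTIONS}
    for t, r in zip(targets, responses):
        totals[t] += 1
        hits[t] += t == r  # a bool adds as 0 or 1
    return {e: 100.0 * hits[e] / totals[e] for e in EMOTIONS if totals[e]}


if __name__ == "__main__":
    # 8 photographs per category, 64 in total, as in the task description.
    targets = [e for e in EMOTIONS for _ in range(8)]
    responses = list(targets)
    responses[:16] = ["neutral"] * 16  # all happiness and disgust trials answered "neutral"
    print(total_hit_rate(targets, responses))  # 75.0
```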

Analogical reasoning task

The Standard Progressive Matrices (SPM) test23 was developed to measure formal reasoning by analogy, which is closely associated with the executive functions of the prefrontal cortex.24 Formal reasoning requires the integration of relations as well as the ability to infer rules and form high-level abstractions, whereas other reasoning tests require the use of real-world knowledge and the inhibition of salient distracters.25 The SPM consists of 60 non-colored diagrammatic puzzles, each with a missing part, which subjects are asked to identify from several options. This test, which has been widely used in Korea,26 has shown high reliability and validity across various cultural groups, regardless of the amount of time provided.27 The SPM score was calculated as the sum of correct answers.

Theory of mind task

The Theory of Mind Picture Stories task28 was developed by Brüne to measure higher-order ToM ability. In this task, ToM is assessed using six cartoon picture stories, each made up of four picture cards. The cards of each story were presented face down in the same order (4-1-2-3), and subjects were asked to turn them over and rearrange them into a logical sequence of events. A sequencing score was allocated for each picture story: two points were given for each of the first and last cards placed in the correct position, and one point for each of the second and third cards placed in the correct position (6 points per story; 0-36 points in total). The questionnaire score comprised 23 points for questions about the mental states of the cartoon characters (e.g., "What does the blond haired person believe is in the box?" and "What does the shopgirl now think the boys intended to do?"). The task thus yielded a sequencing score (0-36) reflecting logical reasoning and a questionnaire score (0-23) reflecting comprehension of first-, second-, and third-order beliefs, as well as false belief, reality, reciprocity, deception, and cheating detection. The overall ToM score was calculated as the sum of the sequencing and questionnaire scores (0-59 points) (for further details, see29).
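The scoring rules can be expressed as a short Python sketch. It assumes that each story's correct card order and the subject's arrangement are given as sequences of card identifiers, and that points are awarded per position (two for the first and last positions, one for the second and third), a reading consistent with the 0-36 range for six stories. The helper names are hypothetical, and the questionnaire score is taken as an already-summed value.

```python
# Hypothetical scoring sketch for the Theory of Mind Picture Stories task.
# Assumption: points are awarded per position when the card placed there is
# the card that belongs there (2 for positions 1 and 4, 1 for positions 2 and 3).
POSITION_POINTS = (2, 1, 1, 2)
N_STORIES = 6
MAX_QUESTIONNAIRE = 23


def sequencing_score(arrangement, correct_order):
    """Score one four-card story (0-6 points)."""
    if len(arrangement) != 4 or len(correct_order) != 4:
        raise ValueError("each story has exactly four cards")
    return sum(points for points, placed, expected
               in zip(POSITION_POINTS, arrangement, correct_order)
               if placed == expected)


def overall_tom_score(arrangements, correct_orders, questionnaire_points):
    """Sum of sequencing (0-36) and questionnaire (0-23) scores, 0-59 in total."""
    if len(arrangements) != N_STORIES or len(correct_orders) != N_STORIES:
        raise ValueError("the task has six stories")
    if not 0 <= questionnaire_points <= MAX_QUESTIONNAIRE:
        raise ValueError("questionnaire score must lie between 0 and 23")
    sequencing = sum(sequencing_score(a, c)
                     for a, c in zip(arrangements, correct_orders))
    return sequencing + questionnaire_points


if __name__ == "__main__":
    correct = (1, 2, 3, 4)
    print(sequencing_score((1, 3, 2, 4), correct))  # 4: only first and last correct
    print(overall_tom_score([correct] * 6, [correct] * 6, 23))  # 59, the maximum
```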

Procedures

Each subject completed the Theory of Mind Picture Stories task, administered by a psychiatrist (LSB) and psychologists (LMK, KSJ), followed by the computerized SPM and the facial emotion recognition task in a controlled laboratory setting. Completing all tasks took approximately 50 minutes.

Statistical analysis

In the present study, all scores were screened for outliers using Tukey's hinges, and where possible, outliers were retained after a winsorizing procedure.30 After data screening, the skewness and kurtosis of all scores were within an acceptable range (absolute value <1.0).
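The screening step can be sketched in Python as follows. This is a minimal illustration, not the authors' code: the 1.5 × H-spread inner fences and the rule of pulling each outlier in to the most extreme non-outlying value are common conventions that we assume here, because the text does not state the exact winsorizing rule. The skewness and kurtosis functions are unadjusted moment estimators, whereas statistical packages often apply small-sample corrections.

```python
# Hypothetical outlier-screening sketch: Tukey's hinges, winsorizing, and
# moment-based skewness/kurtosis checks.
import statistics


def tukey_hinges(values):
    """Lower and upper hinges: medians of the lower and upper halves of the
    sorted data, each half including the overall median when n is odd."""
    s = sorted(values)
    n = len(s)
    half = n // 2
    lower = s[:half + 1] if n % 2 else s[:half]
    upper = s[half:]
    return statistics.median(lower), statistics.median(upper)


def winsorize_tukey(values, k=1.5):
    """Replace values outside the inner fences (hinge -/+ k * H-spread) with the
    most extreme value that lies inside the fences (assumed convention)."""
    lo_hinge, hi_hinge = tukey_hinges(values)
    spread = hi_hinge - lo_hinge
    lo_fence, hi_fence = lo_hinge - k * spread, hi_hinge + k * spread
    inside = [v for v in values if lo_fence <= v <= hi_fence]
    lo_val, hi_val = min(inside), max(inside)
    return [min(max(v, lo_val), hi_val) for v in values]


def _central_moment(values, order):
    mean = sum(values) / len(values)
    return sum((v - mean) ** order for v in values) / len(values)


def skewness(values):
    """Unadjusted moment skewness g1 = m3 / m2**1.5."""
    return _central_moment(values, 3) / _central_moment(values, 2) ** 1.5


def excess_kurtosis(values):
    """Unadjusted moment excess kurtosis g2 = m4 / m2**2 - 3."""
    return _central_moment(values, 4) / _central_moment(values, 2) ** 2 - 3


if __name__ == "__main__":
    scores = [10, 11, 12, 13, 14, 15, 16, 100]
    print(tukey_hinges(scores))     # (11.5, 15.5)
    cleaned = winsorize_tukey(scores)
    print(cleaned)                  # 100 is pulled in to 16
    print(abs(skewness(cleaned)) < 1.0, abs(excess_kurtosis(cleaned)) < 1.0)
```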

Multiple regression was used to examine how well the hypothesized variables predicted the overall hit rate (%) on the emotion recognition task; all predictors were entered simultaneously (enter method). The mediation procedure suggested by Baron and Kenny31 was employed to examine whether the overall ToM score acts as a mediator of the relationship between SPM scores and performance on the facial emotion recognition task. Three sequential regressions were tested: 1) to test whether analogical reasoning ability explains variance in ToM, scores on the Theory of Mind Picture Stories task were regressed on SPM scores (equation 1 in Figure 1); 2) to test whether SPM scores explain variance in facial emotion recognition, facial emotion recognition was regressed on SPM scores (equation 2 in Figure 1); and 3) to test whether ToM still explains variance in facial emotion recognition when analogical reasoning ability is included in the model, facial emotion recognition was regressed on both ToM and SPM scores (equations 2 and 3 in Figure 1). We also conducted Sobel's test32 using unstandardized regression coefficients and standard errors to evaluate the significance of the indirect effect when the mediator is added to the model. Multicollinearity was not a concern for any of the variables included in the analysis (highest variance inflation factor=1.07).
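The three sequential regressions and Sobel's test can be sketched in plain Python. This is a hypothetical illustration, not the authors' analysis code (the statistical software used is not stated): `ols`, `_invert`, and `baron_kenny_sobel` are names we introduce, coefficients are unstandardized as in the Sobel test described above, and the p value uses the standard normal approximation. The statistic is the first-order Sobel formula z = ab / sqrt(b²·SE_a² + a²·SE_b²), where a is the SPM coefficient in equation 1 and b is the ToM coefficient in equation 3.

```python
# Hypothetical sketch of the Baron and Kenny three-regression procedure and
# Sobel's test, using plain-Python ordinary least squares (no external packages).
import math


def _invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pv = aug[col][col]
        aug[col] = [v / pv for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def ols(y, predictors):
    """Fit y = b0 + b1*x1 + ... by OLS; return (coefficients, standard errors),
    intercept first. `predictors` is a list of columns."""
    n = len(y)
    X = [[1.0] + [col[i] for col in predictors] for i in range(n)]
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    inv = _invert(xtx)
    beta = [sum(inv[i][j] * xty[j] for j in range(p)) for i in range(p)]
    resid = [yi - sum(b * xij for b, xij in zip(beta, row)) for row, yi in zip(X, y)]
    sigma2 = sum(e * e for e in resid) / (n - p)  # residual variance
    se = [math.sqrt(sigma2 * inv[i][i]) for i in range(p)]
    return beta, se


def baron_kenny_sobel(x, m, y):
    """x: predictor (e.g., SPM), m: mediator (e.g., ToM), y: outcome (hit rate)."""
    (_, a), (_, se_a) = ols(m, [x])                 # equation 1: mediator on predictor
    (_, c), _ = ols(y, [x])                         # equation 2: outcome on predictor
    (_, c_prime, b), (_, _, se_b) = ols(y, [x, m])  # equation 3: both predictors
    z = a * b / math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    p_two_tailed = math.erfc(abs(z) / math.sqrt(2))  # normal approximation
    return {"a": a, "b": b, "c": c, "c_prime": c_prime,
            "sobel_z": z, "sobel_p": p_two_tailed}


if __name__ == "__main__":
    # Small synthetic example (made-up numbers, for illustration only).
    x = [float(i) for i in range(20)]
    m = [0.5 * xi + ((i * 7) % 5 - 2) for i, xi in enumerate(x)]
    y = [0.3 * xi + 0.8 * mi + ((i * 3) % 4 - 1.5) for i, (xi, mi) in enumerate(zip(x, m))]
    result = baron_kenny_sobel(x, m, y)
    # For OLS on the same sample, c equals c' + a*b up to rounding error.
    print(result["c"] - (result["c_prime"] + result["a"] * result["b"]))
```

In practice, a statistics package (for example, statsmodels in Python) would normally be used for the regressions; the plain-Python version only keeps the example self-contained.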

RESULTS

Performance on the facial emotion recognition task, analogical reasoning, and ToM task

Scores on each task are summarized in Table 1.

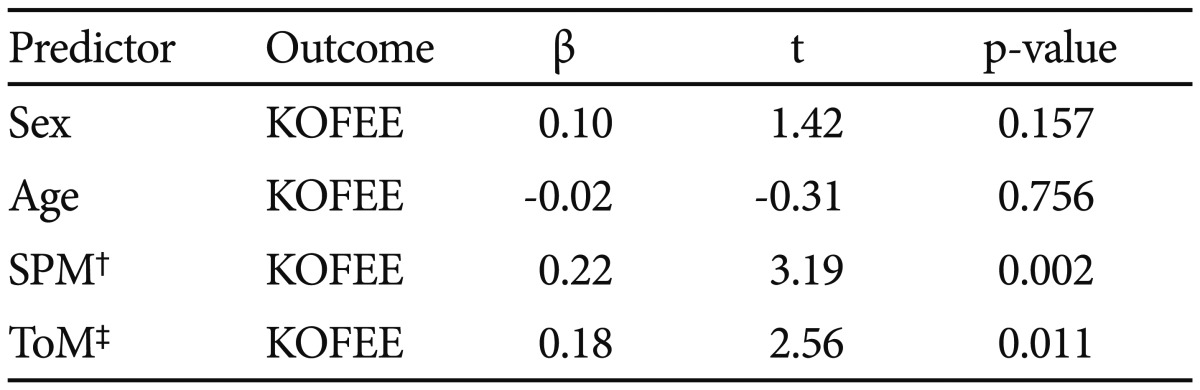

Predictors of facial emotion recognition

Multiple linear regression analysis was performed with the total hit rate (%) on the emotion recognition task as the outcome variable and age, sex, SPM score, and overall ToM score as predictor variables. The overall model was significant [R²=0.10, F(4,195)=5.57, p<0.001]. SPM scores (t=3.19, p=0.002, β=0.22) and overall ToM scores (t=2.56, p=0.011, β=0.18) were both significant predictors. The main results are summarized in Table 2.
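The overall F statistic and its degrees of freedom follow from R², the number of predictors (k=4), and the sample size (n=200), via F = (R²/k) / ((1 - R²)/(n - k - 1)). The small Python check below (the helper name `f_from_r2` is ours) confirms the denominator df of 195. Because R² is reported rounded to two decimals, R² = 0.10 gives F of about 5.42, whereas an unrounded value near 0.1025 gives about 5.57; that unrounded value is inferred here for illustration and is not reported in the study.

```python
# Hypothetical helper: overall F test of a multiple regression from its R^2.
def f_from_r2(r2, n_predictors, n_obs):
    """Return (F, df1, df2) for the overall test of a linear regression with an
    intercept: F = (R^2 / df1) / ((1 - R^2) / df2)."""
    df1 = n_predictors
    df2 = n_obs - n_predictors - 1
    if not 0 <= r2 < 1 or df2 <= 0:
        raise ValueError("need 0 <= R^2 < 1 and more observations than predictors")
    return (r2 / df1) / ((1 - r2) / df2), df1, df2


if __name__ == "__main__":
    # Four predictors (age, sex, SPM, ToM) and 200 subjects, as in the model above.
    for r2 in (0.10, 0.1025):
        f, df1, df2 = f_from_r2(r2, 4, 200)
        print(f"R^2={r2}: F({df1}, {df2}) = {f:.2f}")
```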

Mediation analysis

Baron and Kenny's31 three-step hierarchical regression approach was used to determine whether overall performance on the ToM task mediates the significant relationship between SPM score and performance on the facial emotion recognition task (Table 3). In the first step (equation 1), linear regression analysis revealed that the SPM score was significantly related to the overall ToM score (t=2.94, df=198, p=0.004, β=0.20). In the second step (equation 2), the SPM score significantly predicted the total hit rate on the emotion recognition task (t=3.67, df=198, p<0.001, β=0.25). When the overall ToM score was added in the third step (equation 3), it was significantly associated with the total hit rate on the facial emotion recognition task (t=2.46, df=197, p=0.015, β=0.17), and the SPM score remained significant but became a weaker predictor than in the second step (t=3.14, df=197, p=0.002, β=0.22). In addition, Sobel's test revealed a significant indirect effect of the SPM score on the total hit rate on the facial emotion recognition task when the overall ToM score was added to the model (Z=2.14, p=0.034). Thus, ToM may partially mediate the relationship between SPM and performance on facial emotion recognition.
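As a rough arithmetic check on these results: for ordinary least squares fitted to the same sample, the total effect decomposes exactly as c = c' + ab (for standardized and unstandardized coefficients alike), where a is the SPM coefficient in equation 1, c and c' are the SPM coefficients in equations 2 and 3, and b is the ToM coefficient in equation 3 (this path notation is ours). Plugging in the rounded standardized coefficients reported above:

$$
c' + ab = 0.22 + (0.20)(0.17) = 0.22 + 0.034 = 0.254 \approx 0.25 = c
$$

The decomposition holds to within rounding, and the small product ab is consistent with the modest drop in the SPM coefficient from the second step to the third.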

DISCUSSION

To the best of our knowledge, this is the first study to investigate the role of ToM as a mediator of the relationship between reasoning ability and facial emotion recognition in a large sample (n=200) of healthy subjects. The main finding of our study is that reasoning ability and ToM skills are independent predictors of facial emotion recognition. More importantly, ToM was found to partially mediate the relationship between reasoning ability and facial emotion recognition.

Analogical reasoning (as measured by the SPM) was independently related to facial emotion recognition. This association is consistent with previous findings that the executive functions of abstraction and verbal fluency correlate with facial emotion recognition ability in healthy controls.5 Analogical reasoning indexed by the SPM requires the integration of relations, as well as the ability to infer rules and form abstractions on the basis of non-social information, which is in turn closely associated with the executive function of the prefrontal cortex.25,33 One possible explanation is that when subjects identify emotions in facial photographs at their own pace, analogical reasoning ability, as indexed by performance on figural matrices, may be needed to decode complex visuospatial stimuli and to integrate this information to infer the emotional state of the "posers," or models posing different facial emotional expressions.

An association between ToM skills and facial emotion recognition was also found. This finding can be explained in terms of the relationships among the five proposed core constructs of social and emotional behavior.11 According to this model, higher-level ToM skills may be involved in understanding the meaning of a social-affective stimulus such as a facial emotion. Alternatively, and more specifically, these findings suggest a role for false-belief understanding and perspective-taking, which are components of ToM, because subjects must understand that the poser's emotional state in the photograph was not evoked by them, even though they are in front of the poser's face. This account may apply particularly to faces showing negative emotions such as fear. Subjects with poor false-belief ToM skills and no intention to harm others may not easily understand the poser's expression of fear (thinking "Why is he/she afraid of me? I am innocent.") and therefore may not select "fear" (thinking "His/her expression should not show fear because there is no reason for him/her to be afraid of me"). In contrast, subjects with intact ToM skills and no intention to harm others would know that the poser's expression of fear was not elicited by them (thinking "Why is he/she afraid? It is not my fault, because I was not in front of him/her when the photograph was taken, although at present I am in front of his/her face in the photograph.") and would thus choose "fear" on the response sheet ("His/her expression should be fear, although I do not know the details of the situation that made him/her afraid.").

With regard to the influence of ToM skills on the relationship between higher neurocognition and facial emotion recognition, ToM skills appeared to act as a partial mediator of the relationship between analogical reasoning and facial emotion recognition. High-order neurocognitive processes of inference and abstraction, indexed by the SPM using non-social figural matrices, may also be necessary components of ToM skills, which include logical sequencing and comprehension of false belief, reciprocity, deception, and cheating detection. In our study, the facial emotion recognition task may have required higher-level neurocognitive functioning such as analogical reasoning, so that subjects could label the internal experience of the poser in the photograph on the basis of both the surface information of complex visuospatial features in the poser's facial expression and the generated concept of the internal space of both the subject and the poser; this process simultaneously engages the perspective-taking of ToM skills and reasoning ability. Therefore, analogical reasoning may not only contribute directly to facial emotion recognition but also influence ToM skills, which in turn influence facial emotion recognition.

A limitation of our study is that the measurement of neurocognitive functioning was restricted to one higher neurocognitive function, namely, analogical reasoning ability (SPM). Neurocognitive functioning can be assessed in more than one way, and a range of assessments could have offered a more comprehensive set of results. In addition, we assessed only higher-level ToM skills in the social domain, not low-level mental state inference or indices of lower-level self-boundary and embodied simulation. In the future, more comprehensive assessments of neurocognition, as well as of both higher- and lower-level ToM skills, will be required to clarify the role of ToM skills as a mediator of the relationship between neurocognitive functions and facial emotion recognition.

In summary, we found that reasoning ability and ToM were independent predictors of facial emotion recognition in healthy people. More importantly, ToM was found to partially mediate the relationship between reasoning ability and facial emotion recognition. These findings imply that higher neurocognitive functions, including reasoning, may not only directly contribute towards facial emotion recognition but also influence ToM, which in turn influences facial emotion recognition. These findings also highlight the need for further research to explore the mechanisms underlying deficits in facial emotion recognition, with respect to ToM and neurocognition among individuals with psychiatric illnesses such as schizophrenia and those at an ultra-high risk for psychosis.

Acknowledgments

We would like to express our deep appreciation to Professor Martin Brüne for kindly permitting us to use the Theory of Mind Picture Stories task.

This study was supported by a grant from the National Research Foundation of Korea (NRF), funded by the Korean Government (MSIP) (No. 2010-0024264) and (No. 2010-0026833).