Korean Facial Emotion Recognition Tasks for Schizophrenia Research

Abstract

Objective

Despite the fact that facial emotion recognition (FER) tasks using Western faces should be applied with caution to non-Western participants or patients, there are few psychometrically sound and validated FER tasks featuring Easterners' facial expressions for emotions. Thus, we aimed to develop and establish the psychometric properties of the Korean Facial Emotion Identification Task (K-FEIT) and the Korean Facial Emotion Discrimination Task (K-FEDT) for individuals with schizophrenia.

Methods

The K-FEIT and K-FEDT were administered to 42 Korean individuals with schizophrenia to evaluate their psychometric properties. To test convergent and divergent validity, the Social Behavior Sequencing Task (SBST) and Hinting Task were administered as social-cognitive measures, and the Trail Making Test (TMT)-A and -B were administered as neurocognitive measures.

Results

Average accuracies on the K-FEIT and K-FEDT were 63% and 74%, respectively, and the internal consistencies (Cronbach's alpha) of the K-FEIT and K-FEDT were 0.82 and 0.95, respectively. The K-FEIT and K-FEDT were significantly correlated with the SBST and Hinting Task, but not with the TMT-A and -B.

Conclusion

Following replication studies in a larger sample, the K-FEIT and K-FEDT are expected to facilitate future studies targeting facial emotion recognition in schizophrenia in Korea. Limitations and directions for future research are discussed.

INTRODUCTION

Facial emotion recognition (FER) is critical to understanding interpersonal difficulties in individuals with schizophrenia.1,2,3,4,5 To evaluate the nature of FER deficits and potential benefits of treatment approaches, it is necessary to measure FER deficits with psychometrically valid (i.e., reliable and valid) tasks.

Although it is generally accepted that FER is universal,6,7 consistent cultural and racial differences in FER have been reported.8 For instance, Levin8 found poor FER for other-race faces. The author speculated that people might tend to focus on race information at the expense of individuating information in other-race face recognition. In addition, a recent meta-analytic study supported the existence of a cross-race effect in FER.9 The authors reported that FER accuracy was higher when individuals recognized emotions expressed by people of the same race. Because there is controversy over whether FER is universal, any confounding effects of poser race should be controlled, and the role of poser race should be investigated with a set of stimuli that includes facial emotions expressed by both same- and other-race posers. If there is a cross-race effect in FER, using foreign face stimuli could seriously undermine the validity of FER tasks. Because of growing awareness of cultural specificity in FER among researchers in Korea, there have been extensive efforts to develop databases of facial emotion stimuli with Korean posers.

Lee et al.10 were the first to develop a comprehensive FER set of 6125 facial emotion stimuli (i.e., Korea University Facial Expression Collection, KUFEC) using 49 Korean posers (24 male and 25 female, age range 20-35 years). The KUFEC includes facial emotion stimuli depicting neutral and six basic emotion expressions, and preference for and valence of these stimuli were evaluated.11,12 Park et al.13 developed another FER set, which is made up of 176 expressions, using 15 Korean posers (7 males and 8 females). Most recently, the Extended ChaeLee Korean Facial Expressions of Emotions, which consists of 259 facial expression stimuli created using 37 actors (16 male, 21 female, age range 26-60 years), was standardized.14 These sets of Korean facial emotion stimuli have been extensively used in basic laboratory research in Korea over the past decade.15,16,17,18,19,20

Despite the development of valid Korean facial emotion stimuli, progress in clinical outcome studies targeting FER remains restrained by the lack of psychometrically valid FER tasks with Korean facial emotion stimuli.21,22,23 In other words, detecting treatment benefits of cognitive remediation for FER deficits in Korean samples requires the development of a brief but psychometrically reliable and valid FER task. Thus, it is essential to develop an FER task with valid Korean FER stimuli selected from the databases described above, and to evaluate its psychometric properties in Korean samples.

Two types of FER tasks were considered in the development of a Korean FER task: facial emotion identification (e.g., identifying a single facial emotion), and facial emotion discrimination (e.g., deciding whether two facial emotions expressed by different posers are the same or different). Discrimination requires decoding facial cues from two different stimuli, and identification requires both decoding and labeling facial emotions.1 Previous studies reported that both facial emotion identification and discrimination are impaired in schizophrenia.2,24

With these findings in mind, the current study aimed to develop two novel Korean FER tasks (i.e., facial emotion identification and discrimination tasks) using valid Korean facial emotion stimuli, and to evaluate their psychometric properties. We hypothesized that the novel Korean FER tasks would demonstrate greater internal consistency in Korean patients with schizophrenia than the Western FER tasks, and that the novel Korean FER tasks would have greater convergent and discriminant validity than the Western FER tasks. In other words, compared to the Western FER tasks, the novel Korean FER tasks would be more strongly correlated with measures of social cognition, but not with neurocognition, which is known to be distinct from social cognition.

METHODS

Study procedure

We used the Korea University Facial Expression Collection (KUFEC).10 In comparison with other Korean facial stimuli sets (e.g., Korean Facial Expressions of Emotion and Extended ChaeLee Korean Facial Expressions of Emotions),13,14 the KUFEC was selected because it offers a greater number of facial stimuli (KUFEC=6126) and reports ratings of valence and arousal levels for each stimulus, which allowed for the examination of multiple aspects of a stimulus. Developing an FER task for schizophrenia research involved three steps. First, frontal facial stimuli (392 stimuli depicting facial emotions expressed by 25 Korean men and 24 Korean women) from the KUFEC were carefully reviewed and selected by four graduate-level experts who were trained on the Ekman Facial Emotion Scoring Technique.25 The training focused on detailed facial characteristics for pleasure, surprise, fear, sadness, disgust, and anger (e.g., the inner ends of the eyebrows are raised and the outer edges of the lips are dropped when people are sad). The clarity and intensity of the facial emotion expressed in each stimulus were coded by these experts. In Step 1, 56 stimuli that were rated high on clarity (mean clarity=2/2) and intensity (mean intensity=5.7/6) were selected following the procedure in Ebner et al.26 Then, using Kerr and Neale's paradigm,1 facial emotion identification and discrimination tasks were developed using the selected stimuli from Step 1, and were administered to 83 untrained college students. Inter-rater reliability for the 56 stimuli among the college students was 0.99. For the Korean Facial Emotion Identification Task (K-FEIT), participants were asked to identify the emotion expressed in each face stimulus from among seven emotions and then to rate the intensity of the facial expression on a 7-point Likert scale (1=feel nothing, 7=feel strongly).
For the Korean Facial Emotion Discrimination Task (K-FEDT), two face stimuli were presented and participants were asked to judge whether the two stimuli expressed the same or different emotions. Because previous studies have indicated that women are more expressive than men in terms of their facial expressions,27,28 female faces were always presented with other female faces to control for potential gender effects. For the K-FEDT, 196 pairs of stimuli were presented (28 pairs expressed the same emotion and 168 pairs expressed different emotions). Both the K-FEIT and K-FEDT were created using E-Prime, and the stimuli were presented in a random order. In Step 2, respondents' accuracy rates were used to select stimuli (i.e., the four most accurately identified stimuli for each emotion), resulting in a total of 28 stimuli for the K-FEIT and 98 pairs of stimuli for the K-FEDT for use in Step 3 (the current study). Mean accuracy for the 28 selected stimuli among the college students was 0.82. Mean accuracies for happiness, anger, fear, no emotion, sadness, surprise, and disgust were 1.00, 0.99, 0.20, 0.96, 0.96, 0.95, and 0.81, respectively.
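
The Step 2 selection rule (keeping the four most accurately identified stimuli within each emotion category, which yields 28 stimuli across seven categories) can be sketched as follows. This is only an illustration under stated assumptions, not the procedure as the authors implemented it: the function name `select_top_stimuli`, the record layout, the tie-breaking rule, and all accuracy values are hypothetical.

```python
from collections import defaultdict


def select_top_stimuli(records, per_emotion=4):
    """Keep the `per_emotion` most accurately identified stimuli in each category.

    `records` is a list of (stimulus_id, emotion, accuracy) tuples. This layout
    is an assumption made for illustration only.
    """
    by_emotion = defaultdict(list)
    for stimulus_id, emotion, accuracy in records:
        by_emotion[emotion].append((accuracy, stimulus_id))

    selected = {}
    for emotion, items in by_emotion.items():
        # Rank by accuracy (descending); break ties by stimulus id so the
        # result is deterministic (the tie rule is an assumption).
        ranked = sorted(items, key=lambda pair: (-pair[0], pair[1]))
        selected[emotion] = [stimulus_id for _, stimulus_id in ranked[:per_emotion]]
    return selected


# Made-up accuracy rates for two of the seven categories.
example_records = [
    ("h1", "happiness", 1.00), ("h2", "happiness", 0.98),
    ("h3", "happiness", 0.99), ("h4", "happiness", 0.97),
    ("h5", "happiness", 0.90),
    ("f1", "fear", 0.10), ("f2", "fear", 0.35),
    ("f3", "fear", 0.20), ("f4", "fear", 0.25),
    ("f5", "fear", 0.15),
]
top_four = select_top_stimuli(example_records)
```

Ranking within each category, rather than across the whole pool, keeps every emotion represented even when one category (here, fear) is identified much less accurately than the others.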

Participants

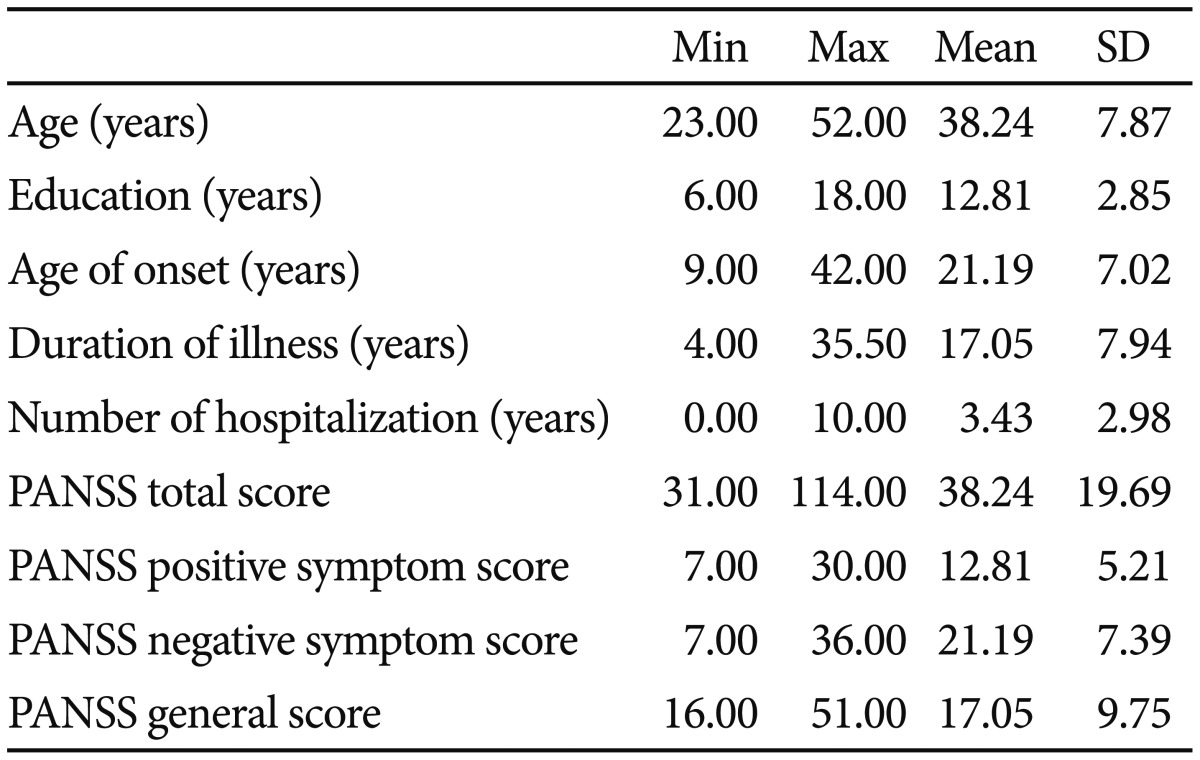

Forty-six individuals diagnosed with schizophrenia or schizoaffective disorder were recruited from community psychiatric rehabilitation centers in Seoul. Informed consent forms were provided and signed prior to participation. Those with mental retardation, neurological disorders, or other comorbid disorders were excluded from participation. Diagnoses were confirmed by trained master's-level clinicians who administered the Korean version of the Mini International Neuropsychiatric Interview.29 Forty-two individuals (28 males and 14 females) completed all the assessments. Thirty individuals were taking antipsychotic medication, distributed as follows: risperidone (33%), clozapine (26%), olanzapine (9%), haloperidol (7%), fluoxetine (4%), aripiprazole (4%), and chlorpromazine (4%). Demographic and symptom severity information is shown in Table 1.

Measures

Korean Facial Emotion Identification Task

As described above, we constructed a Korean emotion identification task using 28 Korean facial emotion stimuli. Stimuli were presented in a random order. Participants were required to identify the emotion presented in the stimulus (i.e., happiness, sadness, anger, fear, surprise, no emotion, or disgust). Specifically, an instruction saying "Please choose the emotion that the person in the picture is feeling" was given before a facial stimulus was presented. Stimuli remained on the screen until participants responded, and there was no time limit. The answer sheet consisted of the following options: "1. Happiness, 2. Anger, 3. Fear, 4. No emotion, 5. Sadness, 6. Surprise, 7. Disgust". The task was made using E-Prime 2.0.

Korean Facial Emotion Discrimination Task

We used 98 pairs of facial emotion stimuli in the Korean emotion discrimination task. Each pair depicted the same or different emotions, and pairs were presented in a random order. Specifically, an instruction saying "Please choose whether the emotions that the two people are feeling are the same or not" was given before a facial stimulus was presented. The answer sheet consisted of the following options: "1. Same emotion, 2. Different emotion". Stimuli remained on the screen until participants responded, and there was no time limit. The task was made using E-Prime 2.0.

Facial Emotion Identification Task

We compared the K-FEIT with the Facial Emotion Identification Task (FEIT). The FEIT uses grayscale Western face photographs of 19 individuals (10 males and 9 females). Each stimulus depicts one of six emotions (happiness, sadness, anger, surprise, fear, or shame), and stimuli are presented in a random order. Instructions were the same as those of the K-FEIT. However, the answer sheet for the first instruction differed from that of the K-FEIT, as it consisted of the following response options: "1. Happiness, 2. Anger, 3. Fear, 4. Sadness, 5. Surprise, 6. Shame". Unlike the original FEIT, stimuli remained on the screen until a response was made, and there was no time limit.

Social cognition

The Social Behavior Sequencing Task (SBST) was used to test social knowledge. SBST has excellent internal consistency and reliability (Cronbach's alpha=0.87) in Korean samples with schizophrenia.30 Theory of Mind (TOM) was assessed using the Hinting Task developed by Corcoran.31 Both measures have been used to investigate social cognition in schizophrenia.

Neurocognition

The Trail Making Test (TMT)-A and -B were used as brief neurocognitive measures to examine basic attention and executive function.

Premorbid intelligence

The vocabulary and picture completion subtests of the Wechsler Adult Intelligence Scale-Third Edition were used to estimate premorbid intelligence. Premorbid IQ was calculated using the Oklahoma Premorbid Intelligence Estimation formula (OPIE).32 OPIE is useful for predicting premorbid intelligence in various clinical samples such as dementia, epilepsy, and schizophrenia.33 Both subtests have excellent internal consistency and reliability (Cronbach's alpha=0.94 and 0.88, respectively).34

Data analysis

Cronbach's alpha coefficients were used to evaluate the internal consistency and reliability of the K-FEIT and K-FEDT. Pearson's correlation coefficients were computed to evaluate the relationships among K-FEIT, K-FEDT, neurocognition, and social cognition scores. All analyses were conducted using SPSS 21.0.
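
For readers who want to see the two statistics spelled out, the following is a minimal sketch of Cronbach's alpha and Pearson's r using only the Python standard library. It is not the SPSS procedure used in the study; the function names `cronbach_alpha` and `pearson_r` and the toy item scores (1=correct, 0=incorrect) are hypothetical and chosen only for illustration.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a participants-by-items score matrix.

    `scores` is a list of rows, one per participant, each holding that
    participant's item scores. Sample variances are used throughout.
    """
    k = len(scores[0])                      # number of items
    items = list(zip(*scores))              # transpose to item columns
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    mean_x, mean_y = mean(x), mean(y)
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cross / (spread_x * spread_y)


# Toy data: four hypothetical participants, three hypothetical items.
toy_scores = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
alpha = cronbach_alpha(toy_scores)

# Toy total scores on two hypothetical tasks.
r = pearson_r([3, 2, 1, 0], [6, 4, 2, 0])
```

Alpha rises when items covary strongly relative to their individual variances, which is why it is read as an index of internal consistency.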

RESULTS

Descriptive item statistics and reliability

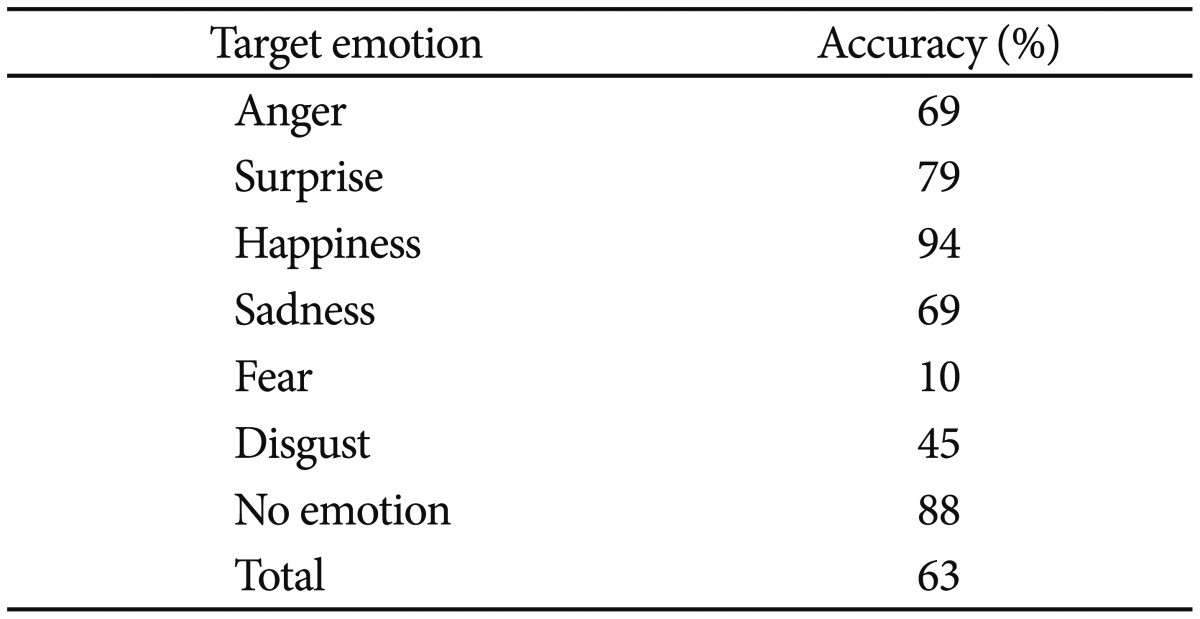

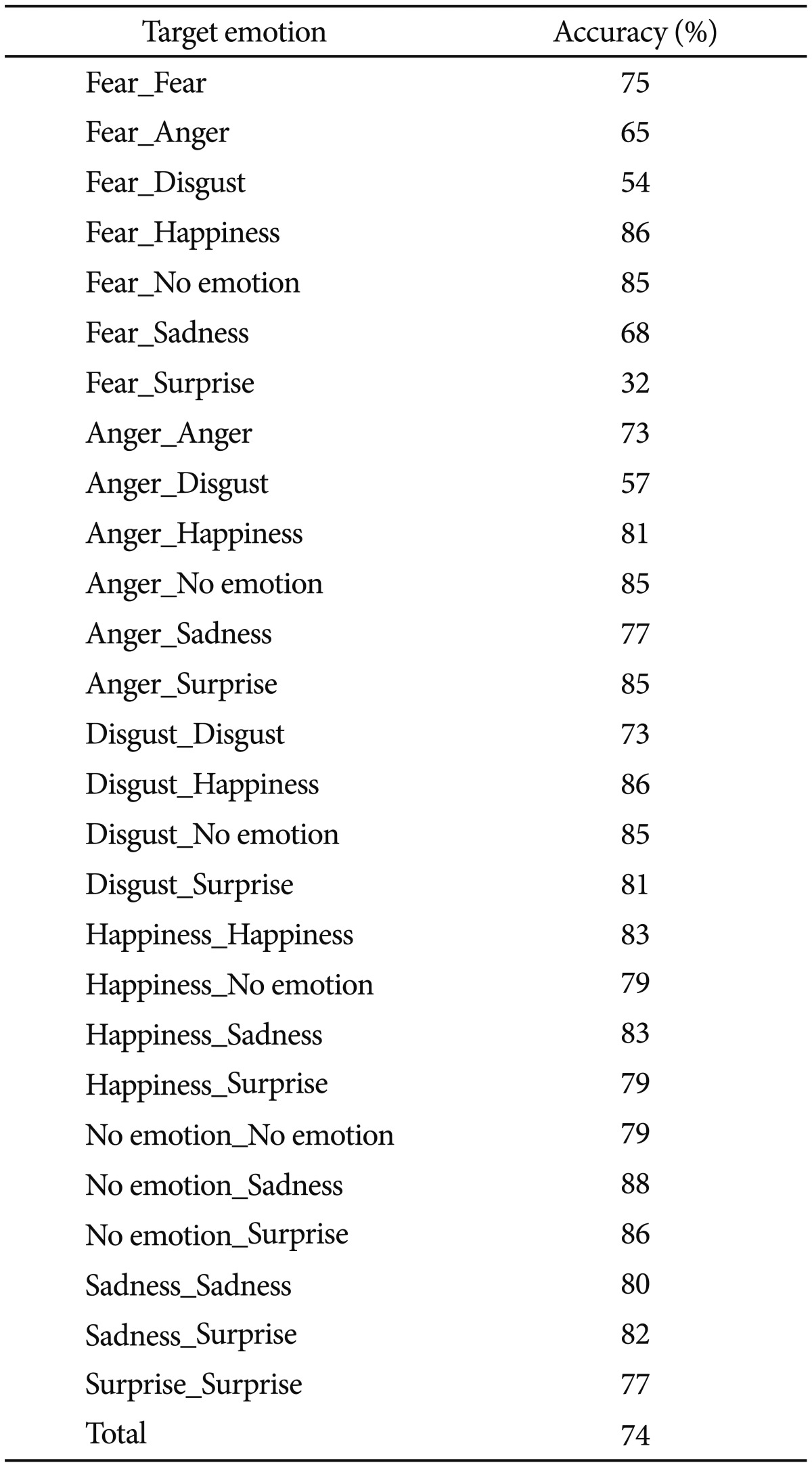

Descriptive statistics for the K-FEIT are shown in Tables 2 and 3. Average accuracy on the K-FEIT was 63% (ranging from 10% for fear to 94% for happiness). Average accuracy on the K-FEDT was 74% (ranging from 32% for fear-surprise pairs to 88% for neutral-sad pairs).

The Cronbach's alpha coefficients for the K-FEIT and K-FEDT were 0.82 and 0.95, respectively.

Validity

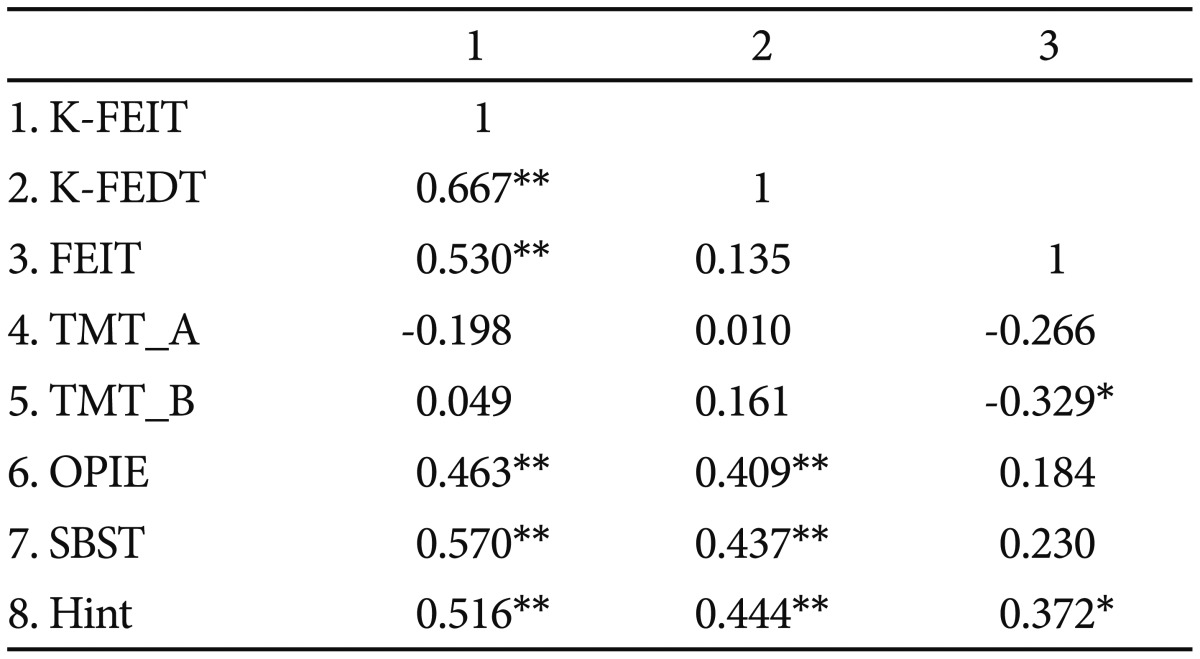

Both K-FEIT and K-FEDT scores were moderately correlated with social cognition measures (Table 4). K-FEIT scores were positively correlated with SBST (r=0.590, p<0.001) and Hinting Task (r=0.506, p<0.005) scores. K-FEDT scores were positively correlated with SBST (r=0.508, p<0.005) and Hinting Task (r=0.466, p<0.005) scores. Neither the K-FEIT nor the K-FEDT was correlated with neurocognitive measures (i.e., TMT-A or -B), and both were positively correlated with premorbid intelligence (r=0.463, p<0.005; r=0.409, p<0.05, respectively). There was a moderate correlation between K-FEIT and FEIT scores (r=0.464, p<0.005).
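
The text does not state how the p values were derived. One conventional approach, sketched here as an assumption, converts a Pearson correlation to a t statistic with n-2 degrees of freedom. The function name `correlation_t_statistic` is hypothetical, and n=42 assumes that all participants who completed the assessments contributed to the correlation.

```python
from math import sqrt


def correlation_t_statistic(r, n):
    """t statistic for testing a zero population correlation.

    Uses t = r * sqrt((n - 2) / (1 - r^2)), where r is the sample Pearson
    correlation and n is the number of paired observations.
    """
    if n < 3:
        raise ValueError("need at least three paired observations")
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    degrees_of_freedom = n - 2
    return r * sqrt(degrees_of_freedom / (1.0 - r * r))


# Reported K-FEIT/SBST correlation; n = 42 is an assumption (see lead-in).
t_value = correlation_t_statistic(0.590, 42)
```

The resulting statistic would then be compared against a t distribution with n-2 degrees of freedom to obtain a two-tailed p value.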

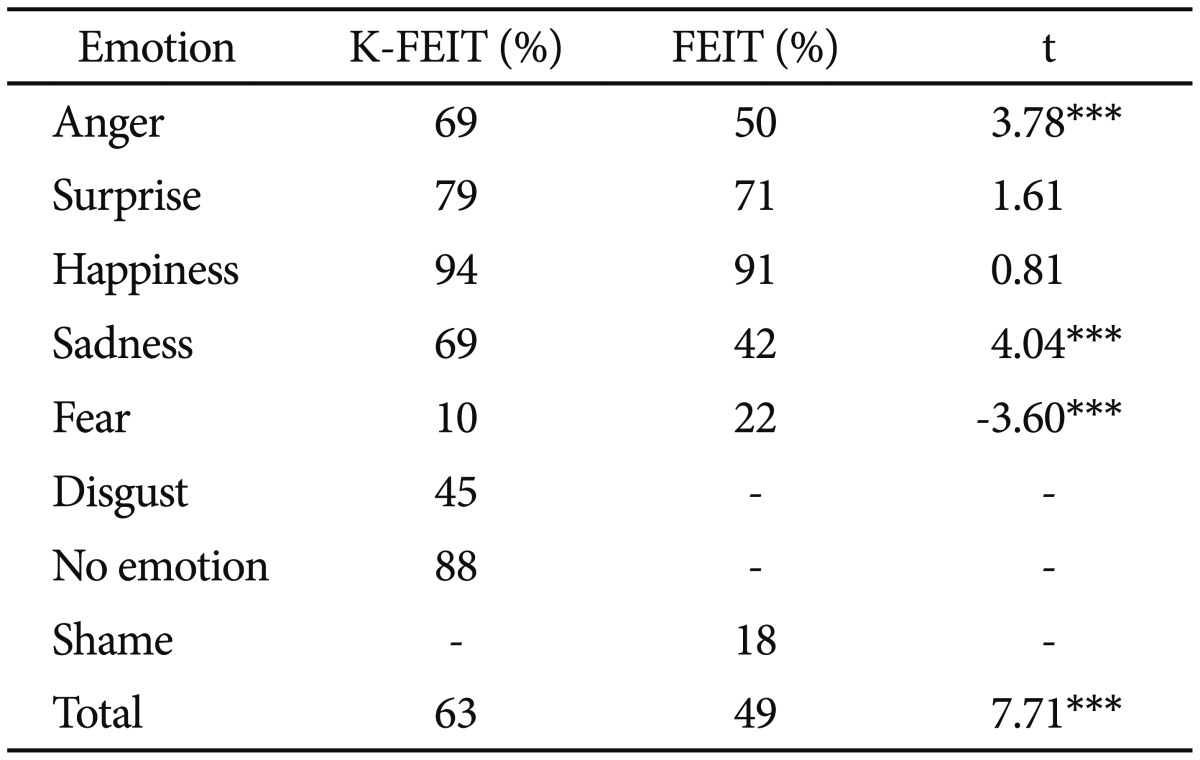

Comparison of the K-FEIT and FEIT

Comparison of the FEIT and K-FEIT revealed higher accuracy for the K-FEIT in all emotion categories, except fear (Table 5). In both tasks, accuracy was highest for happiness (K-FEIT=94%, FEIT=91%), followed by surprise (K-FEIT=79%, FEIT=71%), and accuracy was similar for sadness and anger (K-FEIT sadness=69%, K-FEIT anger=69%; FEIT sadness=42%, FEIT anger=50%). Unlike the K-FEIT, FEIT scores were not correlated with K-FEDT or SBST scores. Although FEIT performance was positively correlated with performance on the Hinting Task (r=0.372, p=0.015), the correlation was smaller than that observed with the K-FEIT.

DISCUSSION

Our study aimed to develop and validate Korean FER tasks for schizophrenia research. To our knowledge, the K-FEIT and K-FEDT are the first validated FER tasks that use Korean facial stimuli. The results showed that the K-FEIT and K-FEDT had good internal reliability, and convergent and discriminant validity. As hypothesized, the K-FEIT and the K-FEDT showed greater reliability compared to the FEIT, which uses Western posers, in Korean patients with schizophrenia. The internal consistency of the K-FEIT in the current study was also higher than that reported in a previous study using the FEIT in Korean samples (0.52 and 0.41 for acute-care and extended-care patients, respectively).35

Consistent with our hypothesis, the K-FEIT and K-FEDT showed significant positive correlations with social cognitive measures, including the SBST and Hinting Task, but not with neurocognitive tasks. The non-significant relationships between our FER tasks and neurocognitive tasks are somewhat in conflict with previous studies that found significant relationships between FER and the Wisconsin Card Sorting Test, Degraded-Stimulus Continuous Performance Test, Letter-Number Span Test, and WMS-R figure memory.36,37,38,39 However, in accordance with our findings, other studies have reported non-significant relationships between facial emotion recognition and performance on the TMT-A and -B and the Continuous Performance Test (CPT).39,40 In contrast, Oh et al.41 reported significant correlations between facial emotion recognition and performance on the TMT-A and -B. These inconsistent results may have several explanations. First, they may reflect differences in participant characteristics: participants in the current study had less severe PANSS scores than those in Oh et al.'s study. Second, the discrepancy may result from differences in task procedures (e.g., whether or not questions were presented simultaneously with the facial stimuli). The current findings suggest that FER task performance may not be related to attention, processing speed, or attention shifts as measured by the TMT-A and -B. However, although the current findings were consistent with previous studies,39,40 the sample was small and the range of measures was limited, so the findings should be replicated in a larger sample with comprehensive neurocognitive measures.

The relatively strong correlations between performance on the FER tasks and other social cognitive tasks have also been reported in previous studies, suggesting that the new Korean FER tasks have concurrent validity as a subdomain of social cognition.36,42,43 In addition, K-FEIT performance was moderately correlated with FEIT performance and strongly correlated with K-FEDT performance. These results are consistent with previous studies showing that emotion identification (i.e., FEIT) and emotion discrimination (i.e., FEDT) are closely related.1 In sum, the current findings provide additional evidence for the convergent and discriminant validity of the new Korean FER tasks.

Participants with schizophrenia achieved higher accuracy on the K-FEIT than the FEIT for all emotions except fear. This is consistent with the cross-race effect.8,9 In other words, Korean participants with schizophrenia appear to be more sensitive to Korean posers' facial emotions (used in the K-FEIT and K-FEDT) than Western posers' facial emotions (used in the FEIT).

Consistent with previous studies,44,45,46,47,48,49 our results also showed that individuals with schizophrenia achieved different levels of performance depending on the valence (i.e., negative vs. positive emotions) of the facial emotions. Participants in this study showed generally high accuracy for positive and neutral facial emotions, although still lower than that of the normal sample, with the highest accuracy for happiness, followed by neutral and surprise. In contrast, participants had lower accuracy for the negative facial emotions, with 69% accuracy for sadness and anger, and less than 50% accuracy for disgust and fear, although accuracy for fear was also low in the normal sample. There are several possible reasons for these results, including aberrant neural processing for recognition of negative emotions.46 Lower accuracy on negative facial emotions in Korean patients with schizophrenia is consistent with previous findings in samples with schizophrenia from various ethnic groups, supporting the argument that difficulty perceiving negative facial emotion is a universal cognitive marker of schizophrenia.49 However, it is still necessary to replicate the current findings in a future study that compares emotion recognition and discrimination performance between individuals with and without schizophrenia.

Interestingly, the mean accuracy for fearful facial stimuli was particularly low on the K-FEIT (10%) compared to other negative facial emotions in the K-FEIT and Western fearful facial stimuli in the FEIT. A similar pattern was reported in another study of FER in a Korean sample with schizophrenia. In that study, accuracy for fear stimuli was noticeably lower than for the other emotions among the seven tested, both in the patients with schizophrenia and in the normal control group.50 Subsequent analyses revealed that low accuracy for the K-FEIT fear stimuli was largely due to misidentifying fear as surprise (54% of participants responded "surprise" on fearful face trials). On the K-FEDT, accuracy was also lower for fear-surprise pairs than for other pairs. In addition, 51% of participants gave "surprise" responses for fear stimuli in the FEIT as well, suggesting a systematic confounding influence of surprise on fear perception in Korean samples with schizophrenia. This pattern was also reported in a study of Korean participants without schizophrenia.14 In that study, 43% of healthy participants perceived surprise in fearful facial emotion stimuli, suggesting that Koreans may be less sensitive to fearful facial emotion than Westerners. Another possible explanation for lower accuracy for fearful facial expressions is that the fearful stimuli in the K-FEIT and K-FEDT were low in salience. Koreans might experience fear relatively rarely and/or there may be different cultural norms that require restraint in emotional expression. Chiao et al.51 reported that both Japanese and Caucasian participants performed relatively worse at recognizing fearful facial emotions in Japanese than in Caucasian posers. Whether lower accuracy for fearful facial expressions was related to lower sensitivity to fearful facial expressions or to less salient Korean fearful facial expressions should be examined in future studies with a larger sample and systematic experimental controls.
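
The kind of error analysis described above (tabulating which labels were chosen on fearful-face trials) can be sketched as follows. This is an illustration under stated assumptions rather than the authors' analysis: the function name `response_distribution`, the trial layout, and the toy responses are hypothetical, and the sketch counts proportions of responses rather than of participants.

```python
from collections import Counter


def response_distribution(trials, target_emotion):
    """Proportion of each response label on trials showing `target_emotion`.

    `trials` is a list of (shown_emotion, response) pairs. This layout is an
    assumption made for illustration only.
    """
    responses = [response for shown, response in trials if shown == target_emotion]
    if not responses:
        raise ValueError("no trials found for the target emotion")
    counts = Counter(responses)
    total = len(responses)
    return {label: count / total for label, count in counts.items()}


# Made-up identification trials: four fear trials and one happiness trial.
toy_trials = [
    ("fear", "surprise"),
    ("fear", "surprise"),
    ("fear", "fear"),
    ("fear", "sadness"),
    ("happiness", "happiness"),
]
fear_distribution = response_distribution(toy_trials, "fear")
```

In this toy example, the entry for "fear" is the accuracy on fear trials, and the remaining entries show how the errors are spread across the other labels.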

Some limitations of this study should be noted. First, we had a relatively small sample size. Although our FER tasks showed quite good reliability and validity, and the correlation patterns with social and neurocognitive measures were generally in accord with prior findings in terms of magnitude and direction, our results should be replicated in another study using a larger sample. Second, antipsychotic medications were not controlled in this study. Given that study participants had remained stable on their prescribed medications for the prior 60 days and a recent meta-analytic study reported that medication was not associated with facial-emotion perception in patients with schizophrenia, the findings are unlikely to be affected by participant medications. However, in a future study, the current findings should be replicated after controlling for potential medication effects. Third, limited neurocognitive measures (i.e., TMT-A and -B) were used. We selected these two tasks to examine discriminant validity based on a previous study that showed that these tasks were not related to FER.40 Lastly, the KUFEC, from which we selected facial stimuli, was not developed strictly following the Ekman system, which required us to make additional ratings based on that system.

Despite these limitations, the current study is the first to develop FER tasks with Korean posers, and to validate these tasks in Korean samples with schizophrenia. We conclude that the new Korean FER tasks (K-FEIT and K-FEDT) appear to be reliable and valid measures for use in schizophrenia research in Korea. It is expected that these tasks will facilitate facial affect perception research and intervention outcome trials targeting FER deficits in individuals with schizophrenia in Korea.