Correlations Between Psychological Status and Perception of Facial Expression

Abstract

Objective

Facial affect recognition is associated with neuropsychological status and psychiatric diseases. We hypothesized that facial affect recognition is associated with psychological status and perception of other affects.

Methods

A total of 80 images depicting facial affect, including 20 Neutral, 20 Angry, 20 Fear, and 20 Sad, were screened for use in our research. A total of 100 healthy individuals were asked to rate these images using a 10-point Likert scale and complete psychological scales assessing the emotional statuses and cognitive functions.

Results

The participants’ emotional state of aggression, attention, and impulsivity may have been associated with their interpretation of the Angry facial expressions. The participants often rated the Angry facial expressions as Fear. The participants rated Fear images as Angry or Sad. In response to a Sad facial expression, the participants reported psychological statuses of attention and impulsivity which were associated with the facial expression rating. The participants rated the Sad expression as Angry or Fear.

Conclusion

The psychological statuses of the participants were significantly correlated with their interpretation of facial affects. In particular, a psychological state of attention was often correlated with incorrect affect ratings. Attention and impulsivity could affect the rating of the sad facial expressions.

INTRODUCTION

Studies on facial affect recognition have been instrumental in gaining insights into cognition and emotion, and in influencing the design of computational models and perceptual interfaces. Such studies have been conducted for several decades [1-3]. Historically, many studies have employed six facial expressions, namely happiness, sadness, anger, disgust, fear, and surprise, when testing human emotional perception [4,5]. Other studies have used more or fewer facial expressions [6,7]. Of these, the expression of happiness was recognized more easily than other emotions [8]. In a meta-analysis of emotional expressions, McKasy [9] reported that anger did not have a significant effect on depth of information processing when compared to other emotions, including neutrality, sadness, happiness, and fear. Anger is defined as a strong unpleasant emotion due to interfering obstacles or disparaging offenses against oneself or another [10,11]. Compared to sadness, anger has a more obvious target of blame and accountability [12]. Fearful facial expressions were more salient to human vision than other facial expressions [13]. Based on the results of these studies, we hypothesized that the differentiation between Angry, Fear, and Sad facial expressions could provide insight into human cognition and emotions.

A facial affect recognition deficit is thought to be due to the individual emotional statuses of depression, anxiety, and aggression [1,14,15], as well as to cognitive factors of attention and impulsivity. Demenescu et al. [14] reported that adults with anxiety disorders or major depressive disorders found it difficult to recognize facial expressions. Alharbi et al. [1] suggested that affective factors, including depression and anxiety, could predict individual differences in emotional recognition. In a multicohort longitudinal study, Acland et al. [15] reported that negative emotion recognition was associated with higher concurrent aggression.

In addition to emotional factors, difficulties in facial affect recognition are associated with cognitive impairments, including attention and impulsivity [16-20]. In a review of facial emotional recognition in adolescents with attention deficit hyperactivity disorder (ADHD), Dan [16] reported that adolescents with ADHD found the recognition of facial expressions difficult due to differences in their brain activity. Löytömäki et al. [17] stated that a delay in emotional recognition in patients with ADHD is associated with the linguistic and cognitive skills required for selective intervention procedures. Faces can provide multidimensional visual stimuli and a broad range of information, including identity, gender, age, race, mood, and intentions [21]. Several studies have suggested that impulsivity could affect the response to emotional face stimuli, including happy, angry, and sad [15]. However, few studies have reported a correlation between cognitive function and emotional perception in healthy individuals, which makes our study one of the first to examine this relationship.

We hypothesized that facial affect recognition would be affected by participants’ emotional status, including depression, anxiety, and aggression, as well as cognitive functions, including attention and impulsivity. Additionally, one facial affect can be perceived as another facial affect influenced by individual emotional and cognitive factors.

METHODS

Participants and study procedure

Effect size was determined using Cohen’s d [22]. The effect size and power values were 0.20 and 0.95, respectively. We planned to recruit 100 participants for the analyses of this study using flyers and the web bulletin board service of Chung-Ang University. This study was approved by the Institutional Review Board of Chung-Ang University (IRB number: 1041078-202008-HRBM-231-01). All the participants provided written informed consent.

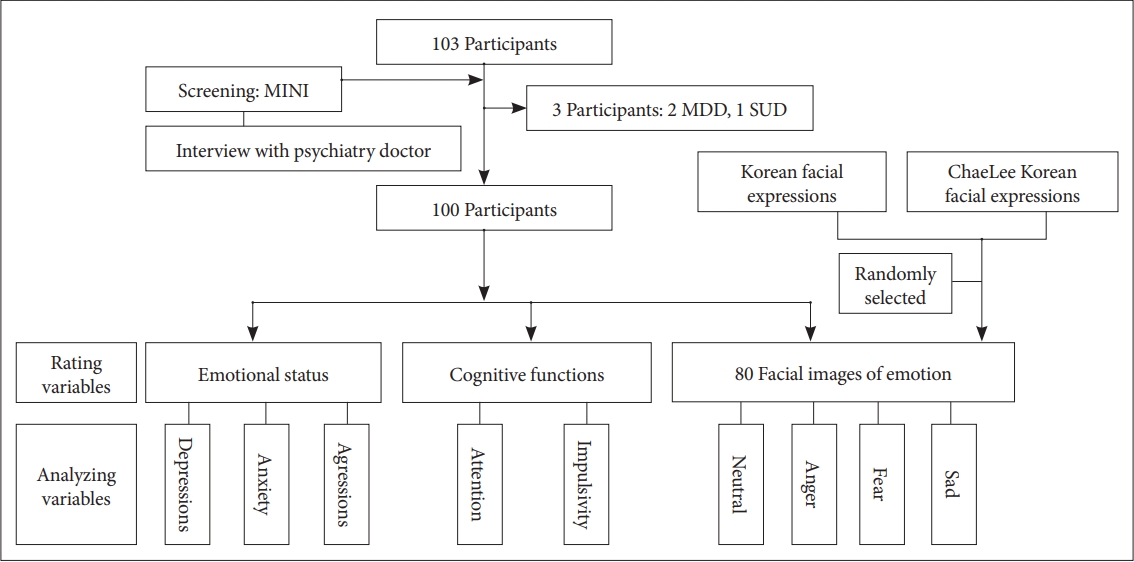

A total of 103 participants were recruited based on the following criteria: 1) the participants must be at least 18 years of age and 2) must not have a history of psychiatric diseases such as schizophrenia, other psychotic disorders, intellectual disability, mental disorders, or neurological disease. Through screening using the Mini International Neuropsychiatric Interview (MINI), and after meeting with a psychiatric doctor (DHH), three participants were excluded from the study. Two participants were excluded because of a major depressive disorder. The other participant was excluded because of substance dependence. Therefore, we used data from a total of 100 participants in the analyses (Figure 1).

Figure 1. Diagram of the research process. MINI, Mini International Neuropsychiatric Interview; MDD, major depressive disorder; SUD, substance use disorder.

After screening for psychiatric comorbidities and completing surveys for psychological status, all participants were asked to rate facial affects in response to images depicting facial expressions, including Neutral, Angry, Fear, and Sad.

Psychiatric comorbidity screening and psychological status assessment

Psychiatric comorbidities were screened using the Korean version of the MINI. The MINI is a semi-structured diagnostic interview that is generally used to assess the presence of co-occurring mental disorders [23,24].

Before rating the facial expression images, all participants were asked to complete psychological surveys in order to assess the emotional status of depression, anxiety, and aggression, as well as cognitive functions of attention and impulsivity (Figure 1).

Depression was assessed using the Beck Depression Inventory II (BDI-II) [25]. The BDI-II is a 21-item self-report inventory used to assess the severity of depression. Each item is rated on a 4-point Likert-type scale ranging from 0 to 3, with a total score ranging from 0 to 63. The Korean version of the BDI-II has good internal consistency (Cronbach’s alpha=0.89) [26]. Anxiety symptoms were assessed using the Beck Anxiety Inventory (BAI). The BAI is a 21-item self-report inventory used to assess anxiety severity. Each item is rated on a 4-point Likert-type scale ranging from 0 to 3, with a total score ranging from 0 to 63. The Korean version of the BAI has good internal consistency (Cronbach’s alpha=0.95) [27]. Impulsivity was assessed using the Barratt Impulsiveness Scale–11 (BIS-11), which consists of 30 items rated on a 4-point scale ranging from 1 to 4 [28]. The Korean version of the BIS-11 has good internal consistency (Cronbach’s alpha=0.78) [29].
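The score ranges described above follow directly from the item counts and response ranges. The following minimal sketch illustrates this; the function name `total_score` is hypothetical, and the sketch assumes a plain sum of item responses, ignoring any reverse-keyed items that a full scoring manual may specify.

```python
from typing import Sequence


def total_score(items: Sequence[int], n_items: int, lo: int, hi: int) -> int:
    """Sum item responses after checking the item count and per-item range."""
    if len(items) != n_items:
        raise ValueError(f"expected {n_items} items, got {len(items)}")
    for value in items:
        if not lo <= value <= hi:
            raise ValueError(f"item response {value} outside {lo}-{hi}")
    return sum(items)


# BDI-II and BAI: 21 items, each rated 0 to 3
bdi_min = total_score([0] * 21, n_items=21, lo=0, hi=3)
bdi_max = total_score([3] * 21, n_items=21, lo=0, hi=3)

# BIS-11: 30 items, each rated 1 to 4 (plain sum; an assumption of this sketch)
bis_min = total_score([1] * 30, n_items=30, lo=1, hi=4)
bis_max = total_score([4] * 30, n_items=30, lo=1, hi=4)

print(bdi_min, bdi_max, bis_min, bis_max)
```

Under the plain-sum assumption, the BIS-11 total would range from 30 to 120, whereas the BDI-II and BAI totals range from 0 to 63 as stated in the text.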

Attention problems were assessed using the Korean version of the Adult Attention Deficit/Hyperactivity Disorder Self-Report Scale (K-ASRS). The total K-ASRS score ranges from 0 (best) to 72 (worst; 34). The questions in the K-ASRS are divided into two sections: A (six questions) and B (12 questions). Four or more positive answers in Section A can indicate symptoms consistent with adult ADHD [30,31]. Aggression was measured using the Buss–Perry Aggression Questionnaire (AQ) [32]. The AQ consists of 29 items assessing overall aggression and four sub-components of aggression: physical aggression, verbal aggression, anger, and hostility. The Korean version of the AQ also has good internal consistency (Cronbach’s alpha=0.87) [33].

Rating facial images of emotion

Eighty images depicting facial expressions were screened in our study. These included 20 Neutral (N), 20 Angry (A), 20 Fear (F), and 20 Sad (S) facial images. All facial affect images were randomly selected from the following four categories: neutral, angry, fear, and sad out of 176 Korean facial expressions [34] and 259 extended ChaeLee Korean facial expressions [35]. Using a 10-point Likert scale, 100 healthy individuals were asked to identify three emotions in each image depicting a facial expression. For example, participants identified anger, fear, and sadness in response to an “angry” facial affect image.

The presentation of the facial expression images consisted of 20 blocks. Each block contained one image from each of the four categories (N, A, F, and S), presented in varying order, so that the 20 images in each category were distributed across the 20 blocks. The presentation order of the categories within the blocks was as follows: N-A-F-S, N-A-S-F, N-F-A-S, N-S-A-F, N-F-S-A, N-S-F-A, A-F-S-N, A-S-F-N, A-N-S-F, A-N-F-S, A-S-N-F, A-F-N-S, F-N-A-S, F-N-S-A, F-A-N-S, F-A-S-N, F-S-A-N, S-A-F-N, A-F-S-N, and S-F-A-N. Each image (5×7 cm) was shown to the participant for three seconds, and the participants then had three seconds to rate it. A total of 480 seconds was therefore required to rate all 80 images in the four categories.
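The block structure and timing described above can be checked with a short sketch. The block orders used here are simply the first 20 of the 24 possible permutations of the four categories, which is an illustrative assumption and not the exact list used in the study; names such as `schedule` are hypothetical.

```python
from itertools import permutations

CATEGORIES = ("N", "A", "F", "S")  # Neutral, Angry, Fear, Sad
N_BLOCKS = 20                      # one image per category in each block
DISPLAY_S = 3                      # seconds each image is shown
RATING_S = 3                       # seconds allowed for the rating

# Illustrative orders only: 20 of the 24 permutations of the four categories.
orders = list(permutations(CATEGORIES))[:N_BLOCKS]

# Label images by category and block, e.g. "N1", "A1", ..., "S20".
schedule = [
    f"{category}{block + 1}"
    for block, order in enumerate(orders)
    for category in order
]

n_images = len(schedule)
total_seconds = n_images * (DISPLAY_S + RATING_S)
print(n_images, total_seconds)
```

With 80 images and six seconds per image (three for display and three for rating), the sketch reproduces the 480 seconds reported in the text.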

If participants could not respond within three seconds, the trial was timed out and discarded from the analyses. Participants underwent response training for 10 minutes to reduce the percentage of discarded trials. Of the 8,000 trials (80 trials for each of 100 participants), 38 (0.48%) were timed out and discarded from the analyses.
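The exclusion rule and the reported percentage can be illustrated as follows. This is a sketch under stated assumptions: the trial record format and the field name `rt_s` are hypothetical, and treating a response at exactly three seconds as valid is an assumption, as the text does not specify the boundary case.

```python
from decimal import Decimal, ROUND_HALF_UP

TIME_LIMIT_S = 3.0  # response window stated in the text


def drop_timeouts(trials, limit_s=TIME_LIMIT_S):
    """Keep only trials with a response recorded within the time limit."""
    return [
        t for t in trials
        if t["rt_s"] is not None and t["rt_s"] <= limit_s
    ]


def percent_discarded(discarded, total):
    """Percentage of discarded trials, rounded half-up to two decimals."""
    pct = Decimal(discarded) * 100 / Decimal(total)
    return pct.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


total_trials = 80 * 100  # 80 images rated by each of 100 participants
pct = percent_discarded(38, total_trials)
print(total_trials, pct)
```

Decimal arithmetic is used here because the exact value, 0.475%, sits on a rounding boundary that binary floating point may not represent exactly.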

Data control and statistics

Linear mixed-effects models were used to estimate the effect of participants’ psychological status on the rating scores, together with the 95% confidence intervals, after adjusting for the effect of participants’ sex. Subsequently, the affect rating of each facial expression image was compared with that of a neutral face, which served as the reference image. In addition, the rating scores for each image were fitted using the estimated coefficients of the linear mixed-effects models. All tests were two-sided, and differences were considered statistically significant at a significance level of 0.05. All statistical analyses were performed using the lmer function of the lme4 package in the R software (version 3.6.3; R Foundation for Statistical Computing, Vienna, Austria).
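One plausible form of the linear mixed-effects model described above is sketched below. The random-effects structure is not stated in the text, so the participant-level random intercept $u_i$ is an assumption, as are all symbol names.

```latex
y_{ij} = \beta_0
       + \sum_{k \in \{\mathrm{A},\mathrm{F},\mathrm{S}\}} \beta_k \,\mathbb{1}[c(j) = k]
       + \boldsymbol{\gamma}^{\top}\mathbf{x}_i
       + \delta\,\mathrm{sex}_i
       + u_i + \varepsilon_{ij},
\qquad
u_i \sim \mathcal{N}(0, \sigma_u^2),\quad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```

Here $y_{ij}$ is the rating given by participant $i$ to image $j$, $c(j)$ is the category of image $j$ with Neutral as the reference level, $\mathbf{x}_i$ collects the participant's psychological scale scores (BDI-II, BAI, AQ, K-ASRS, and BIS-11), and $\mathrm{sex}_i$ is the adjustment covariate. Under this sketch, the fitted rating scores correspond to the fixed-effect part of the right-hand side evaluated at the estimated coefficients.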

RESULTS

Demographic and psychological characteristics of the participants

The clinical characteristics and psychological state of the participants are presented in Table 1. The sex ratios of the participants were 78.0% male and 22.0% female. The mean age of participants was 22.9±2.6 years and educational duration was 14.5±1.7 years.

Effects of psychological status on the rating of facial emotional expressions

In response to fearful facial expressions, the emotional status of aggression and the cognitive function of attention were associated with participants’ ratings (Table 2). After controlling for psychological status, participants also rated the images depicting Fear as Angry or Sad.

In response to the Sad facial expression, the cognitive functions of attention and impulsivity were associated with the participants’ facial affect ratings (Table 2). After controlling for psychological status, participants also rated the images depicting Sad as Angry or Fear. In summary, after controlling for psychological status, fearful and sad facial expressions could be interpreted as other emotions.

Fitted rating scores of facial emotion expression images

Among the neutral facial expression images, those depicting Neutral 11 had the lowest fitted rating scores, while Neutral 1 had the highest fitted rating scores in the Angry, Fear, and Sad ratings. Among the facial expression images depicting anger, the image depicting Angry 13 had the highest fitted rating score, and Angry 6 had the lowest fitted rating score in the Angry group. Among the images depicting fear, that of Fear 18 had the highest fitted rating score, and Fear 7 had the lowest fitted rating score in the Fear group. Among the images depicting sad facial expressions, Sad 2 had the highest fitted rating score, and Sad 11 had the lowest fitted rating score in the Sad group (Table 3).

DISCUSSION

In the present study, participants’ emotional mood and anxiety were not linked to the rating of facial emotional expressions. This differs from the results of previous studies [1,2,14]. Many studies have suggested that patients with depression and anxiety tend to gravitate toward depressive or anxious facial emotional expressions [1,2,14]. In a longitudinal study on recognition thresholds, Mei et al. [36] reported that individuals with subthreshold depression exhibited increased perceptual sensitivity toward sad expressions, which was associated with their current depressive states. Our study differs from previous studies in that we recruited healthy subjects after screening for psychiatric diseases, whereas previous studies recruited patients with depression, anxiety disorders, and ADHD. The emotional status of aggression could have affected the participants’ ratings of fearful expressions in the current study, which is consistent with a previous study. Acland et al. [15] reported that negative emotions, including sadness and fear, were concurrent with an aggressive emotional status in healthy children.

The cognitive function of the participants was significantly correlated with their interpretation of the facial affects. Attention, in particular, was correlated with affect ratings. Attention and aggression levels may have affected the ratings of fearful facial expressions in the present study. Attention and impulsivity may have affected ratings of sad facial expressions.

These results are in line with those of previous studies on the correlation between facial expression and attention [37,38]. The attention mechanism is thought to play a crucial role in human emotion perception, including feature extraction and artifact removal [39]. The saliency and meaning of facial emotional expressions can facilitate conscious perception in healthy subjects [40].

Additionally, the emotional and motivational value of social signals derived from facial expressions may be associated with the attention system [41]. Faces were thought to be regarded as special objects containing social significance, such as innate salience [42]. In the competition of several facial emotional expressions, fearful expressions with a sensory advantage were most salient to human vision [6]. Bertini and Làdavas [43] suggested that fear-related signals should be prioritized in the visual system. In a previous systematic review, fear was the facial expression that patients with ADHD were least likely to recognize [44]. Pessoa et al. [13] stated that fear facial expressions would be more salient to human vision than are other facial expressions.

The core deficits of facial expression recognition in ADHD might be caused by a failure to correctly interpret affects due to inattention or impulsivity [37]. Deficits in sustained attention and inhibition in ADHD are thought to dysregulate emotional facial perception processing [45]. In fact, aggression and impulsivity were associated with Fear and Sad facial expressions in the present study. In a review of emotional dysregulation in ADHD, van Stralen [46] stated that executive function deficits may be associated with inappropriate internalized (sadness) or externalized (aggression) emotional responses.

However, whether abnormal executive function in subjects with ADHD can cause deficits in emotional recognition remains controversial [47]. Petroni et al. [48] suggested that these two capabilities may be separate from each other at the clinical level; however, they are linked at the neural level.

In the present study, participants were more likely to interpret facial expressions as emotions that they had previously felt. However, images depicting Fear could be rated as Angry or Sad, while images depicting a Sad facial expression could be rated as Angry or Fear. Even after controlling for emotional status and cognitive function, healthy individuals can misinterpret facial expressions as other emotions. Shioiro et al. [49] reported the misinterpretation of emotional facial expressions: sadness and anger were misinterpreted as disgust, and fear was misinterpreted as surprise. Misinterpretation of facial expressions has usually been reported to be associated with cultural background and emotional intensity [17]. However, the present study suggests that significant misinterpretation of facial expressions could occur even under conditions of the same cultural background and emotional intensity. Based on these results, we suggest that researchers consider the participants’ psychological status, including emotional status and cognitive functions, as well as their misinterpretation of facial expressions.

The present study has several limitations. First, the small number of participants and unbalanced sex distribution are insufficient to generalize the results, although we considered them in the statistical analyses. Second, in the present study, we did not perform thorough standardized cognitive function tests to assess attention and intelligence. Future studies should include a larger number of participants, a more balanced sex distribution, and cognitive function tests.

In conclusion, our findings suggest that the interpretation of facial expressions can be affected by psychological status and by misinterpretation as other affects. Researchers should consider these factors when planning facial expression studies.

Notes

Availability of Data and Material

The datasets generated or analyzed during the study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors have no potential conflicts of interest to disclose.

Author Contributions

Conceptualization: Doug Hyun Han, Young Don Son. Data curation: Sujin Bae. Formal analysis: Beom Seuk Hwang, Eunhee Rhee. Funding acquisition: Doug Hyun Han, Young Don Son. Investigation: Doug Hyun Han. Methodology: Doug Hyun Han, Sujin Bae. Project administration: Eunhee Rhee. Validation: Sujin Bae, Ji Hyun Bae. Writing—original draft: Doug Hyun Han, Young Don Son. Writing—review & editing: Doug Hyun Han, Sujin Bae.

Funding Statement

This work was supported by a National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (NRF-2020R1A4A1019623).

Acknowledgements

We acknowledge the contributions of colleagues, institutions, and agencies that aided the efforts of the authors.