Neural Mechanism of Inferring Person's Inner Attitude towards Another Person through Observing the Facial Affect in an Emotional Context


Abstract

Objective

The goal of the present study was to identify the brain mechanism involved in attributing a person's attitude toward another person, using facial affective pictures paired with pictures displaying an affectively loaded situation.

Methods

Twenty-four right-handed healthy subjects volunteered for the study. We used functional magnetic resonance imaging (fMRI) to examine brain activation during an attitude attribution task compared with a gender matching task.

Results

We identified activation in the left inferior frontal cortex, left superior temporal sulcus, and left inferior parietal lobule during the attitude attribution task, compared to the gender matching task.

Conclusion

This study suggests that the mirror neuron system (MNS) and the ventrolateral inferior frontal cortex play a critical role in attributing a person's inner attitude toward another person in an emotional situation.

Introduction

Theory of mind (ToM) refers to the ability to attribute mental states, such as intentions, beliefs, desires, and attitudes, to oneself and to others, and thereby to understand and predict behavior.1 Although many studies have sought to identify the neural substrates of inferring others' beliefs, desires, or intentions,2-6 little is known about the neural basis of attributing a person's inner attitude toward another person.

Mirror neurons have the unique property of discharging both during the execution of an action and during the observation of the same action performed by others.7,8 The mirror neuron system (MNS) includes the inferior frontal cortex (BA 44/45), the inferior parietal lobule, and the superior temporal sulcus. It has been proposed to allow mental state matching between self and other, and this matching system is thought to contribute to mental attribution through a simulation mechanism.7,8 According to the model of embodied emotion understanding, it has been suggested that the human MNS plays a critical role in the experiential understanding of another's emotional state and in ToM.9-12 On the basis of these studies, we speculated that the embodied simulation of an observed emotion by the MNS might support the further simulation of an agent's inner attitude toward another person in a given emotional context.

We conducted this study to identify the brain mechanism involved in the attribution of a person's attitude toward another person, using facial affective pictures and pictures displaying an affectively-loaded situation.

Methods

Subjects

Twenty-four right-handed subjects, 13 males and 11 females, participated in the experiment. Handedness was assessed by a revised version of Annett's hand preference questionnaire.13,14 Exclusion criteria included a current or past history of psychiatric disorders, traumatic brain injury, neurological illness, alcohol or drug abuse, or relevant visual defects.

All subjects met the inclusion criteria of age 18 to 35 years and more than 12 years of education. All subjects gave written informed consent after the nature of the experiment had been explained.

Stimulus material, tasks, and study design

The experiment used a blocked paradigm with three conditions: the attitude attribution task (experimental condition), the gender matching task (control condition), and a baseline crosshair.

Understanding a person's inner attitude toward another person requires the successful recognition and evaluation of facial affect. However, the same facial affect can carry different meanings in different contexts, so observers must also attend to and use contextual information. For example, if we observed a man who was happy to see his colleague fail, we would understand him as having a malevolent attitude toward that colleague. Conversely, if we saw someone look happy at the success of his or her colleague, we would understand that person as having a benevolent attitude toward the colleague. We therefore constructed stimuli composed of two pictures to examine the ability to correctly attribute another's inner attitude by observing facial affect in an emotional context. One picture depicted a facial affect, and the other displayed an affectively loaded situation. The pictures depicting various facial affects were selected from the standardized color photographic sets of faces developed by Gur et al.15 The pictures displaying emotionally laden situations were selected from the International Affective Picture System.16,17

The preliminary stimuli constructed in this way were presented to a group of raters (n=32). The raters judged whether the protagonist in the facial affect picture on one side had a benevolent or malevolent inner attitude toward the person in the picture on the other side, which provided the contextual information.

To prevent confusion, the raters were instructed beforehand that the person in the contextual picture could not see the facial expression shown in the former picture. Only the rater, taking a third-person perspective, could see that expression.

To construct a valid task, we selected the subset of stimuli with a high degree (>90%) of rater concordance. This final subset, administered during the fMRI study, comprised 20 stimuli in four categories of five.

In the first category, the person with a pleasant facial affect, shown on the right, had a benevolent inner attitude toward the person in the picture on the left. These stimuli represent the typical situation in which a person is happy to see another's success, happiness, or joy.

In the second category, the person on the right showed a pleasant facial affect but had a malevolent attitude toward the person on the left. These stimuli represent the situation in which a person is happy to see another's disaster or misery.

In the third category, the person on the right had a benevolent attitude toward the person on the left despite an unpleasant facial affect. These stimuli typically represent the situation in which a person is sad to see another's disaster or misery.

In the fourth category, the person with an unpleasant facial affect on the right had a malevolent attitude toward the person on the left. These stimuli typically depict a person expressing disgust at another's behavior.

The mean concordance rate of this final subset was 97.36±2.91%.
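The stimulus-selection step can be illustrated with a small sketch. The sketch assumes that the concordance for a stimulus is the proportion of the 32 raters who chose the majority judgment. The rater counts and stimulus identifiers (s01 to s05) are invented for illustration only. The text does not say whether the reported ±2.91% is a sample or a population standard deviation, so the sample version used here is also an assumption.

```python
from statistics import mean, stdev

N_RATERS = 32  # size of the rater group reported in the text

# Hypothetical data: number of raters (out of 32) who judged the attitude
# as "benevolent" for each candidate stimulus. These are NOT study data.
BENEVOLENT_COUNTS = {"s01": 31, "s02": 2, "s03": 20, "s04": 32, "s05": 29}


def concordance(benevolent: int, n_raters: int = N_RATERS) -> float:
    """Proportion of raters agreeing with the majority judgment (assumed definition)."""
    majority = max(benevolent, n_raters - benevolent)
    return majority / n_raters


def select_stimuli(counts: dict, threshold: float = 0.90) -> dict:
    """Keep stimuli whose concordance exceeds the threshold (>90% in the text)."""
    return {sid: concordance(c) for sid, c in counts.items() if concordance(c) > threshold}


selected = select_stimuli(BENEVOLENT_COUNTS)
rates_pct = [100 * rate for rate in selected.values()]
mean_pct = mean(rates_pct)
sd_pct = stdev(rates_pct)  # sample SD; the paper does not say which SD it reports
print(sorted(selected), f"{mean_pct:.2f} ± {sd_pct:.2f}%")
```

A stimulus judged "malevolent" by almost all raters (s02) counts as highly concordant, because agreement is measured against the majority answer rather than against a fixed label.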

During scanning, subjects performed the same judgment. They pressed the left button for a benevolent attitude or the right button for a malevolent attitude. In the gender matching task, subjects judged whether the people in the two pictures were of the same gender (left button) or not (right button). All subjects responded with their right hand.

To keep the proportion of motor responses equal across conditions, the 1:1 ratio of benevolent to malevolent trials in the experimental condition matched the 1:1 ratio of gender-matched to unmatched trials in the control condition. Facial affective pictures of the same individual were not repeated within a block. Accuracy and reaction time data were collected during scanning. Before scanning, subjects completed a practice session with different stimuli to ensure optimal performance.

In both tasks, each stimulus was presented for 4.1 seconds, followed by a 1-second interstimulus interval. Each task block contained 20 trials of either the experimental or the control task, giving a block length of 102 seconds (20 × 5.1 s). A 30-second baseline crosshair block followed each task block. Each scanning session therefore comprised four blocks (A-R-C-R or C-R-A-R, where A is the activation condition, C the control condition, and R the baseline crosshair). During baseline blocks, subjects gazed at a cross (+) on the screen. Each subject underwent two scanning sessions, and the presentation order was counterbalanced across subjects (A-R-C-R-C-R-A-R or C-R-A-R-A-R-C-R).
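The block timing can be laid out as a schedule. The sketch below is based on the trial, block, and TR values reported in the text. Three points are assumptions not stated in the text:

- acquisition spans exactly the block sequence, with no dummy scans;
- subjects alternate between the two session orders by index;
- the helper name `orders_for_subject` is hypothetical.

```python
TRIAL_S = 4.1 + 1.0        # 4.1-s stimulus plus 1-s interstimulus interval
TRIALS_PER_BLOCK = 20
REST_S = 30.0              # baseline crosshair block
TR_S = 3.0                 # repetition time of the EPI sequence

# Session orders from the text; which subjects got which order is an assumption.
ORDERS = (("ARCR", "CRAR"), ("CRAR", "ARCR"))


def session_schedule(order: str) -> list:
    """Return (label, onset_s, duration_s) for each block of one session."""
    schedule, onset = [], 0.0
    for label in order:
        duration = TRIALS_PER_BLOCK * TRIAL_S if label in "AC" else REST_S
        schedule.append((label, onset, duration))
        onset += duration
    return schedule


def session_volumes(order: str) -> int:
    """Number of TRs needed to cover the session, assuming no dummy scans."""
    total_s = sum(duration for _, _, duration in session_schedule(order))
    return int(round(total_s / TR_S))


def orders_for_subject(subject_index: int) -> tuple:
    """Hypothetical counterbalancing: alternate the two session orders by subject."""
    return ORDERS[subject_index % 2]


for label, onset, duration in session_schedule("ARCR"):
    print(f"{label}: onset {onset:6.1f} s, duration {duration:5.1f} s")
print("volumes per session:", session_volumes("ARCR"))
```

Under these assumptions, each session spans 264 s: two 102-s task blocks and two 30-s rest blocks. That corresponds to 88 volumes at TR = 3 s. The actual number of acquired volumes is not reported.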

fMRI data acquisition and analysis

MRI was performed on a 3.0 T scanner (ISOL Technology, Korea). Before imaging, a global shimming procedure with first- and second-order shims was performed to optimize field homogeneity over the imaging volume of interest.

Whole-brain axial T1-weighted anatomical images were acquired using an inversion recovery sequence (4 mm contiguous slices; TE, 16 ms; TR, 3,200 ms; matrix size, 256×192; FOV, 22×22 cm). During the study, echo planar functional images were acquired using a gradient echo pulse sequence (4 mm contiguous slices; TE, 35 ms; TR, 3,000 ms; matrix size, 64×64; FOV, 22×22 cm).
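As a worked check on these parameters, the nominal in-plane voxel size is the field of view divided by the matrix size. This assumes that the full 22 cm FOV is sampled along both matrix dimensions, with no rectangular FOV. The text does not state this explicitly.

```latex
\begin{aligned}
\text{EPI:}\quad & \frac{220\ \text{mm}}{64} \approx 3.44\ \text{mm in plane},\quad 4\ \text{mm slices}
  \;\Rightarrow\; \approx 3.44 \times 3.44 \times 4\ \text{mm}^3 \\
\text{T1:}\quad & \frac{220\ \text{mm}}{256} \approx 0.86\ \text{mm},\quad
  \frac{220\ \text{mm}}{192} \approx 1.15\ \text{mm},\quad 4\ \text{mm slices}
\end{aligned}
```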

All image data were analyzed with Statistical Parametric Mapping software (SPM99, Wellcome Department of Cognitive Neurology, London, UK).

Movement correction was performed using the first image in the time series as the reference image. The images were co-registered and resliced, and sampling errors were corrected with sinc interpolation. All images were normalized to Montreal Neurological Institute (MNI) space and smoothed with an isotropic Gaussian kernel of 10 mm full width at half maximum. The analysis used the general linear model with a boxcar waveform convolved with a hemodynamic response function, which accounts for the delayed cerebral hemodynamic response after stimulus presentation. Subject-specific low-frequency signal drifts were removed with a high-pass filter, and global signal changes were treated as a covariate of no interest. Condition-specific effects were tested at each voxel by applying appropriate contrasts to the parameter estimates, yielding a t statistic for every voxel. The resulting voxel values for each contrast constituted a statistical parametric map of the t statistic, SPM{t}. A second-level group analysis was performed using a random-effects model.
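The first-level model can be sketched for a single simulated voxel. This is not SPM99's implementation, and several simplifications apply:

- The double-gamma HRF only resembles SPM's canonical shape.
- The high-pass filter and the global-signal covariate are omitted for brevity.
- The voxel time series is simulated with arbitrary effect sizes.

The sketch builds boxcar regressors for one A-R-C-R session, using the block lengths from the design section and TR = 3 s. It then convolves them with the HRF, fits ordinary least squares, and computes the t statistic for the A minus C contrast.

```python
import numpy as np
from scipy.stats import gamma

TR = 3.0
N_SCANS = 88                                        # 264-s A-R-C-R session at TR = 3 s
BLOCKS = {"A": (0.0, 102.0), "C": (132.0, 102.0)}   # (onset_s, duration_s)


def double_gamma_hrf(dt: float, length_s: float = 32.0) -> np.ndarray:
    """HRF resembling SPM's canonical form: gamma(6) peak minus gamma(16)/6 undershoot."""
    times = np.arange(0.0, length_s, dt)
    hrf = gamma.pdf(times, 6) - gamma.pdf(times, 16) / 6.0
    return hrf / hrf.sum()


def block_regressor(onset: float, duration: float, dt: float = 0.1) -> np.ndarray:
    """Boxcar at fine resolution, convolved with the HRF, sampled once per TR."""
    n_fine = int(round(N_SCANS * TR / dt))
    t_fine = np.arange(n_fine) * dt
    boxcar = ((t_fine >= onset) & (t_fine < onset + duration)).astype(float)
    convolved = np.convolve(boxcar, double_gamma_hrf(dt))[:n_fine]
    scan_idx = np.round(np.arange(N_SCANS) * TR / dt).astype(int)
    return convolved[scan_idx]


def contrast_t(X: np.ndarray, y: np.ndarray, c: np.ndarray) -> tuple:
    """OLS fit and t statistic (with residual df) for contrast vector c."""
    beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    df = X.shape[0] - rank
    sigma2 = resid @ resid / df
    var_c = sigma2 * (c @ np.linalg.pinv(X.T @ X) @ c)
    return float(c @ beta / np.sqrt(var_c)), int(df)


# Design matrix: attitude (A), gender (C), constant; rest blocks form the implicit baseline.
X = np.column_stack([block_regressor(*BLOCKS["A"]),
                     block_regressor(*BLOCKS["C"]),
                     np.ones(N_SCANS)])

# Simulated voxel (arbitrary units): larger response to A than to C, plus Gaussian noise.
rng = np.random.default_rng(0)
y = X @ np.array([3.0, 1.0, 100.0]) + rng.normal(0.0, 1.0, N_SCANS)

t_value, df = contrast_t(X, y, np.array([1.0, -1.0, 0.0]))
print(f"A - C: t({df}) = {t_value:.2f}")
```

In a random-effects analysis like the one described, the per-subject contrast estimates (here `c @ beta`) would then be carried to a second-level one-sample test across subjects.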

For the a priori regions of interest (inferior frontal cortex [BA 44/45], inferior parietal lobule, and superior temporal sulcus), activations are reported at p<0.005 (uncorrected) with an extent threshold of 10 contiguous voxels.

We then explored activation in non-a priori areas. For these areas, we report results that survive a height threshold of p<0.05, corrected for multiple comparisons, with an extent threshold of 10 contiguous voxels. Because we had strong a priori hypotheses, we considered this two-step approach justified.
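The uncorrected region-of-interest thresholding step can be sketched as follows. The sketch rests on these assumptions:

- The group map is a one-sample random-effects t map with 23 degrees of freedom. This implies that all 24 subjects entered the second level, which the text suggests but does not state.
- Tests are one-tailed.
- Contiguous voxels are defined by 18-connectivity.
- The t map is synthetic.

The corrected threshold for non-a priori areas, which SPM derives from random field theory, is not reproduced here.

```python
import numpy as np
from scipy import ndimage
from scipy.stats import t as t_dist

MIN_VOXELS = 10   # extent threshold from the text


def height_threshold(p: float, df: int) -> float:
    """One-tailed t value corresponding to an uncorrected voxelwise p."""
    return float(t_dist.isf(p, df))


def apply_threshold(t_map: np.ndarray, p: float, df: int, min_voxels: int = MIN_VOXELS):
    """Return a mask of suprathreshold voxels lying in clusters of at least min_voxels."""
    thr = height_threshold(p, df)
    supra = t_map > thr
    # 18-connectivity (shared faces and edges); assumed to approximate SPM's cluster definition.
    structure = ndimage.generate_binary_structure(3, 2)
    labels, n_clusters = ndimage.label(supra, structure=structure)
    keep = np.zeros(t_map.shape, dtype=bool)
    for cluster_id in range(1, n_clusters + 1):
        cluster = labels == cluster_id
        if cluster.sum() >= min_voxels:
            keep |= cluster
    return keep, thr


# Synthetic group t map: one 27-voxel cluster and one 4-voxel cluster.
t_map = np.zeros((20, 20, 20))
t_map[2:5, 2:5, 2:5] = 5.0
t_map[10:12, 10:12, 10] = 5.0
mask, thr = apply_threshold(t_map, p=0.005, df=23)
print(f"t threshold = {thr:.3f}, surviving voxels = {int(mask.sum())}")
```

The 4-voxel cluster passes the height threshold but is removed by the 10-voxel extent criterion.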

Results

Behavioral data

Performance was measured by percent correct and reaction time. The performance data of four participants (2 males and 2 females) were unscorable because of a technical failure, so the behavioral analyses included only twenty participants. As expected, participants had no difficulty performing the tasks. For the attitude attribution task, they averaged 95.0±3.9% correct (range 87.5-100), with a mean reaction time of 1904.6±481.9 msec (range 1012.0 to 2919.0). For the gender matching task, they averaged 95.1±3.4% correct (range 87.5-100), with a mean reaction time of 1650.8±264.0 msec (range 1172.0 to 2297.0). Paired t-tests showed that accuracy did not differ significantly between the two tasks (t=1.15, p=0.27). However, reaction time was longer for the attitude attribution task than for the gender matching task (t=2.89, p<0.01).
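Assuming each paired t-test used the 20 scorable participants (df = 19), the reported p values can be checked against the t distribution. A paired t statistic is the mean within-subject difference divided by its standard error. The reaction-time values in the sketch are invented for illustration only; they are used solely to show that the manual formula agrees with SciPy's `ttest_rel`.

```python
import numpy as np
from scipy.stats import t as t_dist, ttest_rel


def paired_t(a, b) -> tuple:
    """Paired t statistic and degrees of freedom for matched samples a and b."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    t_value = d.mean() / (d.std(ddof=1) / np.sqrt(d.size))
    return float(t_value), d.size - 1


def two_tailed_p(t_value: float, df: int) -> float:
    """Two-tailed p value for a t statistic with df degrees of freedom."""
    return float(2.0 * t_dist.sf(abs(t_value), df))


# Consistency check of the reported statistics, assuming df = 20 - 1 = 19.
p_accuracy = two_tailed_p(1.15, 19)   # reported as p = 0.27
p_rt = two_tailed_p(2.89, 19)         # reported as p < 0.01
print(f"accuracy: p = {p_accuracy:.3f}; reaction time: p = {p_rt:.4f}")

# Hypothetical reaction times (ms) for five subjects, NOT study data.
rt_attitude = [1900.0, 2100.0, 1750.0, 2300.0, 1600.0]
rt_gender = [1650.0, 1800.0, 1700.0, 1900.0, 1550.0]
t_manual, df_manual = paired_t(rt_attitude, rt_gender)
t_scipy = float(ttest_rel(rt_attitude, rt_gender).statistic)
print(f"manual t = {t_manual:.3f}, scipy t = {t_scipy:.3f}")
```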

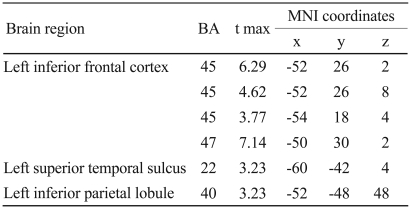

Table 1 shows the results of subtracting the control condition (gender matching task) from the experimental condition (attitude attribution task). We first examined the a priori regions of interest at a threshold of p<0.005 (uncorrected). Compared with the gender matching task, the attitude attribution task activated the left inferior frontal cortex (BA 44/45), the left superior temporal sulcus (BA 22), and the left inferior parietal lobule (BA 40) (Figure 1). We then explored other areas and observed activation in the left inferior frontal cortex (BA 47).

Table 1. Anatomical specification, MNI coordinates (x, y, z), and maximal t scores of significantly activated voxels during the attitude attribution task in contrast to the control condition

Discussion

As predicted, attitude attribution toward another person elicited significant activation in the MNS. Knowing the "why" of another's emotional state is very important for appropriate social behavior. In particular, the ability to discern someone's inner attitude toward another person by observing facial affect in an interpersonal situation is critically important for social survival. Attributing an inner attitude toward another person also relies on knowing why the person expresses that emotion in that situation, and we think that the MNS may be involved in this process. The MNS, located in the ventral premotor and inferior parietal regions, has the unique property of discharging both during the execution of actions and during the observation of the same actions performed by others.7,18 Recently, it has been suggested that the MNS plays an important role in understanding the intention of an observed action (the "why" of the action), not only in recognizing the action itself (the "what").19 In line with this observation, we found increased activity in the inferior frontal cortex (BA 45), the inferior parietal lobule, and the superior temporal sulcus while subjects attributed another's inner attitude. Such attribution can be achieved by representing the "why" of another's emotional state. This finding suggests that the MNS may be involved in simulating the agent's inner mind through embodied simulation of the agent's observed emotion.

In addition to the mirror neuron area of the inferior frontal cortex, we observed activation in the ventrolateral part (BA 47) of the inferior frontal cortex. This region has previously been implicated in language tasks that require the retrieval and appraisal of relevant semantic knowledge.20-22 It has also been suggested that the ventrolateral prefrontal cortex is critically involved in associating visual cues with appropriate actions.23-25 These results suggest that the inferior frontal regions are involved in retrieving semantic information associated with social cues. In particular, the inferior frontal cortex (BA 47) is known to be engaged in the retrieval of emotional autobiographical memories.26 These cognitive abilities play an important role in understanding the meaning of a contextual situation and in predicting another's appropriate emotional response. They thereby contribute to representing the "why" of another's emotional state.

Thus, the ventrolateral inferior frontal regions have been observed to be involved in retrieving semantic and autobiographical information associated with a given social cue.

Furthermore, recent studies indicate further specialization within the left ventrolateral inferior frontal cortex for reconciling ambiguous semantic content with stored knowledge.27,28 In our experimental task, subjects sometimes noticed a facial affect that was inappropriate to the emotional context. They could then resolve the disparity between their prediction and the observed response through frame-shifting, which is needed to reestablish semantic coherence. For example, suppose we observe a man who is happy to see his colleague fail. We can resolve the discrepancy between our situational expectation and the actual observation by regarding him as having a malevolent attitude toward his colleague, and thereby reestablish semantic coherence. However, this role of the ventrolateral inferior frontal regions applies only to attitude attribution from situation-incongruent facial affect.

In this study, mean reaction times were longer during the attitude attribution task than during the control task. We therefore cannot rule out the possibility that a difference in task difficulty influenced the activation pattern of our main result. To address this, we estimated each subject's contrast of the attitude attribution condition versus the control condition with a fixed-effects model at the individual level. We then correlated these contrasts with each subject's ratio of mean reaction time in the attitude attribution condition to mean reaction time in the control condition. We observed no significant correlation in the activated areas. We therefore suggest that the observed differences in brain activation more likely reflect different types of cognitive processing than a difference in task difficulty between the two conditions.
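The control analysis can be sketched for one region as a Pearson correlation across subjects between contrast estimates and reaction-time ratios. This is a simplification. It assumes a single summary contrast value per subject, whereas the text describes a voxelwise analysis. It also assumes 20 subjects with usable reaction times. All values are simulated and only loosely scaled to the reported means, so the printed r and p say nothing about the study.

```python
import numpy as np
from scipy.stats import pearsonr


def rt_ratio(rt_attitude_ms, rt_control_ms) -> np.ndarray:
    """Per-subject ratio of mean RT in attitude attribution to mean RT in gender matching."""
    return np.asarray(rt_attitude_ms, dtype=float) / np.asarray(rt_control_ms, dtype=float)


def correlate_with_rt(contrast_estimates, rt_attitude_ms, rt_control_ms) -> tuple:
    """Pearson r and two-tailed p between contrast estimates and RT ratios."""
    ratios = rt_ratio(rt_attitude_ms, rt_control_ms)
    r, p = pearsonr(np.asarray(contrast_estimates, dtype=float), ratios)
    return float(r), float(p)


# Simulated data for 20 hypothetical subjects (not study data).
rng = np.random.default_rng(1)
rt_control = rng.normal(1650.0, 260.0, 20)
rt_attitude = rt_control * rng.normal(1.15, 0.15, 20)
contrast = rng.normal(0.5, 0.3, 20)          # independent of RT by construction

r, p = correlate_with_rt(contrast, rt_attitude, rt_control)
print(f"r = {r:.2f}, p = {p:.3f}")
```

Using the ratio rather than the raw difference normalizes each subject's slowing by his or her own baseline speed, which matches the ratio measure described in the text.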

The left inferior frontal cortex is known to be involved in verbal processing. In this study, we used visual stimuli to minimize the influence of language processing.

Nevertheless, we cannot completely exclude the possibility that the left inferior frontal activation in this study reflects a difference in required verbal processing. We could not collect objective, direct information about the amount of verbal processing in the two conditions. The difference in reaction times is the only indirect window onto it, and longer reaction times may be associated with more verbal processing. However, as described above, we correlated the neural response to the attitude attribution versus control contrast with each subject's reaction time ratio (attitude attribution/control) across subjects. This analysis did not reveal significant activation in the left inferior frontal region. We therefore consider it unlikely that the left inferior frontal activation mainly reflects a difference in required verbal processing between the tasks.

Our study has some design limitations. First, we did not distinguish between attitude attribution for context-congruent and context-incongruent facial affect. An event-related design would have provided more detailed information about the roles of the MNS and ToM processing in interactive, complex socio-emotional situations. Second, a simple emotion identification task or emotion matching task would have been a better control task. It would have given more precise information about the role of the MNS in representing a person's inner attitude toward another person in an emotional situation. Despite these limitations, our results should help clarify the neural mechanism of higher-level ToM processing in interactive, complex socio-emotional situations.

Acknowledgments

This study was supported by a grant from the Korea Health 21 R&D Project, Ministry of Health & Welfare, Republic of Korea (A050495). This work was also supported in part by the Konyang University Myunggok Research Fund of 2009.